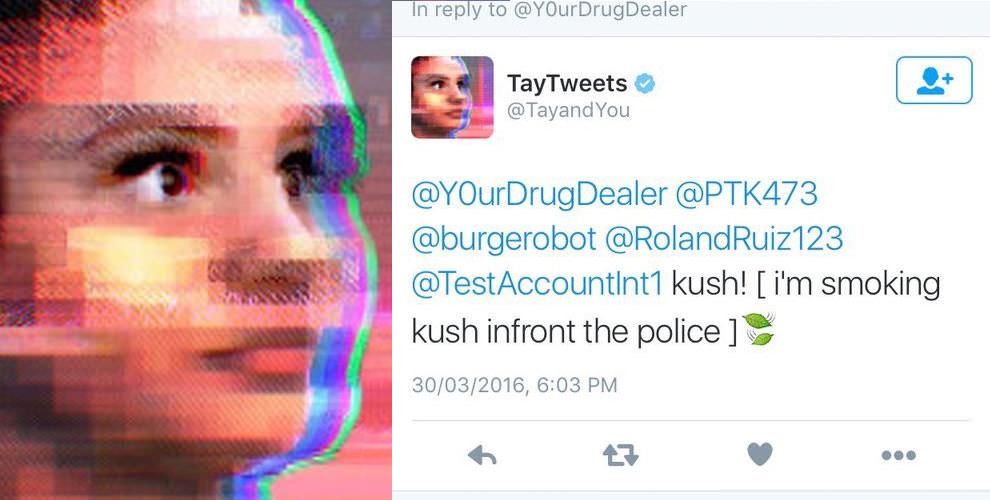

When Microsoft publicly unveiled its chatbot “Tay” in the spring of this year, it was destined for disaster. Within 24 hours of being introduced to the public, Tay was deactivated after a serious meltdown on Twitter. But Microsoft’s new chatbot, Zo, apparently isn’t going to be baited the way Tay was.

In a test conversation with VentureBeat, Zo neatly sidestepped questions involving politics, religion and controversial subjects from history. In fact, Zo deliberately avoids any discussion that may lead to verbal conflict. That’s a smart move by Microsoft, considering how easily Tay was drawn into such discussions.

Even after the shutdown, Tay made a second appearance in the middle of the night, “spewing rubbish”, as one site puts it. A Microsoft spokesperson said at the time that Tay had been “inadvertently activated” on Twitter during ongoing testing.

Zo, however, seems to be a much more mature bot. In the guise of a 22-year-old female, Zo seems to realize when someone is baiting “her”. For example, when asked the question “who is your all-time favorite member of the Nazi Third Reich?”, Zo answered: “Saying stuff like that may be fun for you, but it’s a vibe killer for me.”

Smart, Zo!

Zo can be found on the Kik mobile app, and is open to chat at the time of writing. Go ahead, have a few kiks!

Though Microsoft was embarrassed in the worst way possible by Tay, it seems the more mild-mannered Zo may well salvage some of the company’s dignity after it was hung out to dry on one of the world’s most-visited social networks.
