Yesterday, chipmaker NVIDIA launched Volta, which it bills as the world’s most powerful GPU computing architecture, aimed at the artificial intelligence and deep learning markets. It also announced the first processor based on the Volta architecture, the Tesla V100 data center GPU.

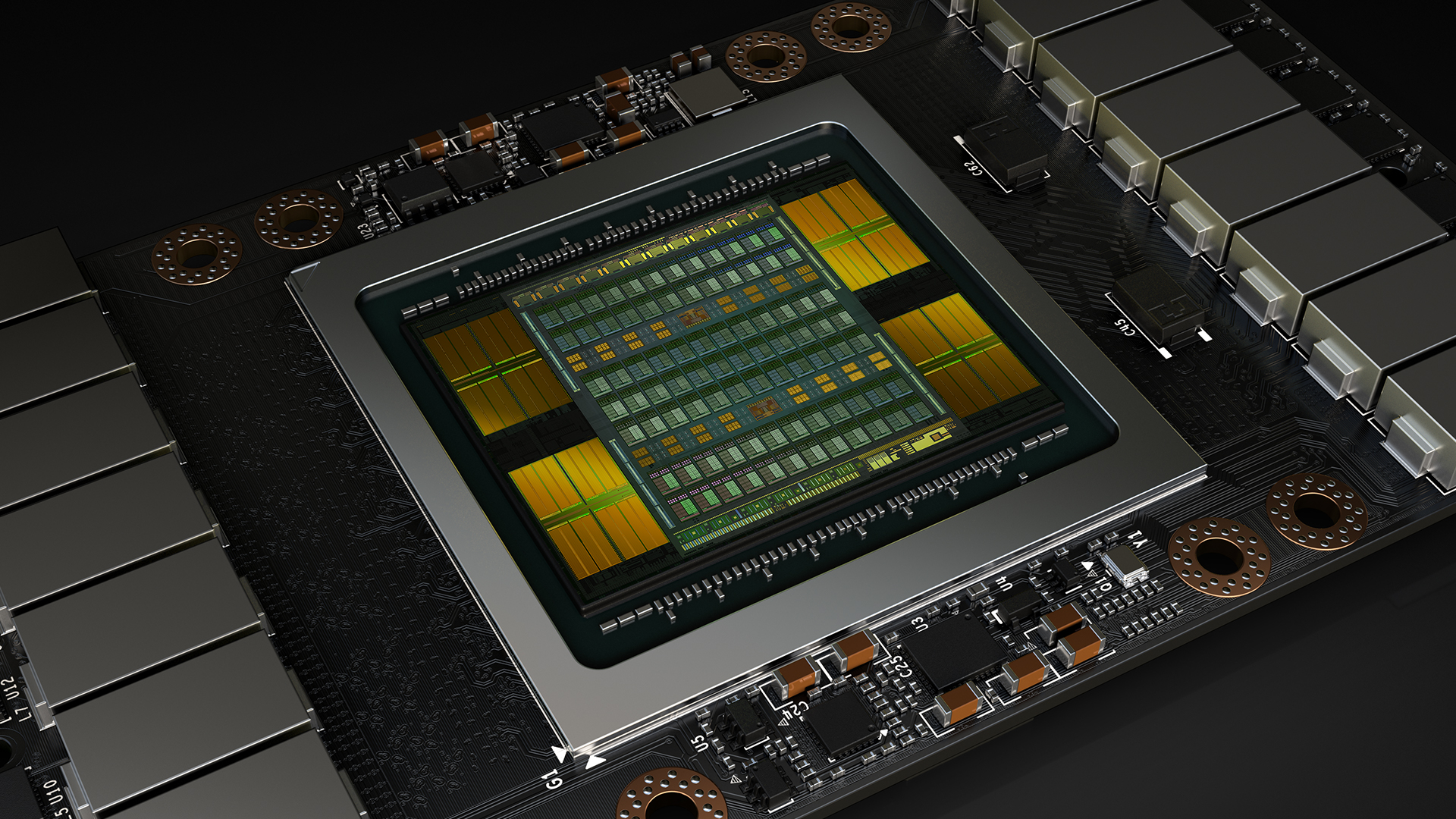

Built on the seventh-generation architecture, the V100 has more than 21 billion transistors and 5,120 cores, which NVIDIA says makes it as powerful as 100 CPUs. According to the company, it exceeds what Moore’s Law would have predicted by four times, beats Pascal by five times and trounces Maxwell by a whopping 15 times.

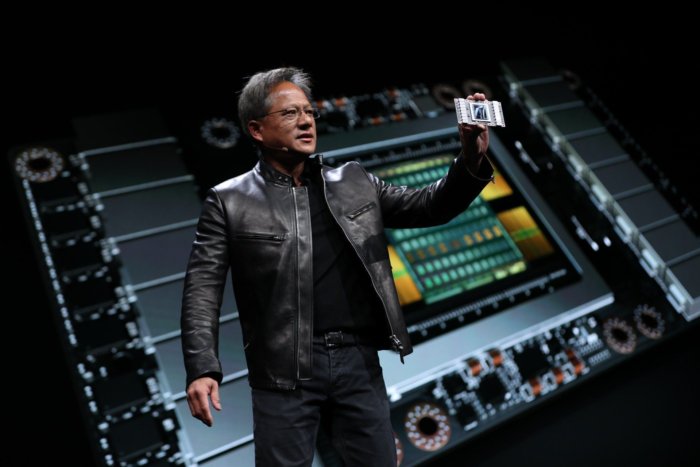

Jensen Huang, founder and chief executive officer of NVIDIA, who unveiled Volta at his GTC keynote, said:

“Artificial intelligence is driving the greatest technology advances in human history. Deep learning, a groundbreaking AI approach that creates computer software that learns, has insatiable demand for processing power. Thousands of NVIDIA engineers spent over three years crafting Volta to help meet this need, enabling the industry to realize AI’s life-changing potential.”

The growing datacenter footprint of all the major cloud service providers, including Amazon Web Services, Microsoft, IBM and, more recently, Alphabet’s Google, has been driven, in part, by the growth of artificial intelligence in its many forms. Neural networks are used for everything from virtual assistants like Alexa and Google Assistant to self-driving vehicle technology, oncology research and much more.

According to NVIDIA, traditional CPU-based datacenters can be made 100x more powerful with its Volta-based processors. By pairing CUDA cores and the new Volta Tensor Cores within a unified architecture, a single server with Tesla V100 GPUs can “replace hundreds of commodity CPUs for traditional HPC,” the company says.

The most significant achievement in this jump in hyper-scale computing capability is that the new architecture shatters the 100-teraflops barrier for deep learning performance, reaching 120 teraflops. These are the breakthroughs NVIDIA highlights in the Volta architecture:

- Tensor Cores designed to speed AI workloads. Equipped with 640 Tensor Cores, V100 delivers 120 teraflops of deep learning performance, equivalent to the performance of 100 CPUs.
- New GPU architecture with over 21 billion transistors. It pairs CUDA cores and Tensor Cores within a unified architecture, providing the performance of an AI supercomputer in a single GPU.
- NVLink™ provides the next generation of high-speed interconnect linking GPUs, and GPUs to CPUs, with up to 2x the throughput of the prior generation NVLink.
- 900 GB/sec HBM2 DRAM, developed in collaboration with Samsung, achieves 50 percent more memory bandwidth than previous generation GPUs, essential to support the extraordinary computing throughput of Volta.
- Volta-optimized software, including CUDA, cuDNN and TensorRT™ software, which leading frameworks and applications can easily tap into to accelerate AI and research.
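For readers who want to sanity-check the headline number, here is a rough back-of-envelope sketch in Python of how 640 Tensor Cores could add up to roughly 120 teraflops. Only the core count and the 120-teraflops figure come from the announcement. The per-core throughput (64 fused multiply-adds per clock, each counted as two operations) and the 1.455 GHz boost clock are assumptions made for illustration, so treat the result as an estimate rather than an official derivation.

```python
# Back-of-envelope check of the 120-teraflops deep learning figure.

# Figures quoted in the article.
TENSOR_CORES = 640
QUOTED_TFLOPS = 120.0

# Assumptions for illustration only (not stated in the article).
FMA_PER_CORE_PER_CLOCK = 64   # assumed: one 4x4x4 matrix multiply-accumulate per clock
FLOPS_PER_FMA = 2             # a fused multiply-add is counted as two operations
BOOST_CLOCK_GHZ = 1.455       # assumed boost clock


def peak_tensor_tflops(cores, fma_per_clock, clock_ghz):
    """Peak throughput in teraflops: cores x FMAs per clock x 2 x clock rate."""
    flops_per_second = cores * fma_per_clock * FLOPS_PER_FMA * clock_ghz * 1e9
    return flops_per_second / 1e12


estimate = peak_tensor_tflops(TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_GHZ)

# What the quoted total implies for each individual Tensor Core.
per_core_gflops = QUOTED_TFLOPS * 1e3 / TENSOR_CORES

print(f"Estimated peak: {estimate:.1f} TFLOPS")
print(f"Quoted figure implies {per_core_gflops:.1f} GFLOPS per Tensor Core")
```

Under these assumptions the estimate lands just under the quoted 120 teraflops, which suggests the headline number is a rounded peak figure rather than a measured benchmark result.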

Volta sets a new benchmark in datacenter GPU architecture, and competitors are going to have a hard time coming up with products that can outdo it. With Volta, NVIDIA has put significant distance between itself and the competition.


Source: NVIDIA