Renowned theoretical physicist, author and cosmologist Stephen William Hawking has once again warned the world about the future of artificial intelligence and the effects it could have on humanity as a whole. Talking to Wired magazine, Hawking said AI would soon reach a stage where it would outperform humans in all fields.

Tesla Motors and SpaceX CEO Elon Musk has expressed the same concern, as has Microsoft co-founder and former CEO Bill Gates.

What do these icons of our time know that the general public doesn't? Or are they collectively envisioning the worst-case scenario simply to warn people of a possible cataclysm in our future?

On the AI front, Hawking believes that someone will create an artificially intelligent program that is capable of replicating itself, which will give rise to a “new form of life” that outperforms human beings. His solution? Start a new space program to keep the brilliant young minds of our time occupied with solving humanity’s problems.

Earlier this year, at the opening of Cambridge University’s new AI research facility in London, Stephen Hawking made similar statements, saying that artificial intelligence could bring as many dangers to the human race as it does benefits.

But are these doomsday oracles likely to be proved right, or are they taking an overly pessimistic view of the future?

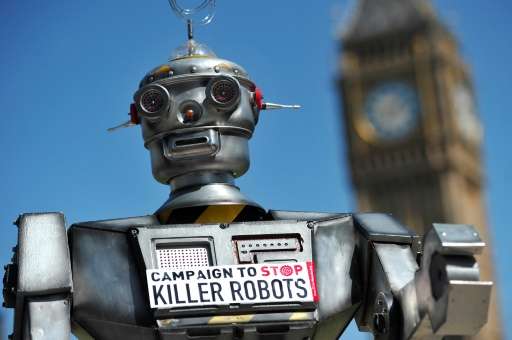

On the one hand, most people who are unfamiliar with a new technology have an inherent fear of it. On the other, some robotics experts believe that the fear of robots arises from humans' fear of one another, and of how they might turn machines and robots against each other. In other words, the fear is not of the technology itself, but of how bad actors might misuse it against other humans.

Seen from that second perspective, what Hawking, Gates and Musk have been saying makes a lot of sense. There will always be malicious actors in our midst, and the real danger to our future safety and security lies in what happens when they get their hands on technology that can be turned into malicious autonomous entities.

As an example, suppose the U.S. military is working on a top-secret experiment to create autonomous unmanned bombers that can carry nuclear warheads. Even though the project carries several intrinsic risks, the real danger comes from whoever is programming these systems. Autonomy doesn't happen on its own: a machine has to be taught what to do before it can grow and start learning by itself. That is where human control plays a vital role, because this early stage is when a set of “ethics” can be built into the system before it becomes truly autonomous.

But, as I said, that’s exactly where the danger lurks.

The development of AI needs proper ethical direction, and that is what we lack at this point. The field is relatively new, yet rapidly developing AI technologies are already easily accessible to everyone. That could be the real risk here.

I don't presume to know what the future holds, or whether AI robots and autonomous killing machines will run rampant through city streets in Terminator-esque fashion. But we cannot ignore the warnings given to us by some of the most inspired minds of our time.

Thanks for visiting. Please support 1redDrop on social media: Facebook | Twitter