Though Tesla has been working on its own AI chip for a while, no one really knew when it would replace NVIDIA chips with its own. That date now seems closer than ever.

Elon Musk went to great lengths to explain why Tesla wanted to design its own chips. During the second quarter earnings call he said, “the key is to be able to run the neural net at a bare metal level so that it’s especially doing the calculations in the circuits itself and not in some sort of emulation mode which is how a GPU or a CPU would operate.”

“Our current hardware, which – I’m a big fan of NVIDIA, they do great stuff. But using a GPU, fundamentally it’s an emulation mode, and then you also get choked on the bus. So, the transfer between the GPU and the CPU ends up being one that constrains the system.”

Simply put, Elon Musk wants to close the gap between the GPU and the CPU and keep data processing local. NVIDIA has been focusing on GPU-accelerated computing, but Tesla feels that may not be enough to handle the localized workload of the Tesla computer that needs to run autonomous operations.

Elon Musk gave more details on the power of Tesla AI chips. According to Musk, “whereas the current hardware can do 200 frames a second, this is able to do over 2,000 frames a second and with full redundancy and fail-over.”

It’s a huge statement, and one that a lot of critics will not be ready to believe. But Tesla has had something cooking under its hood for a while now.

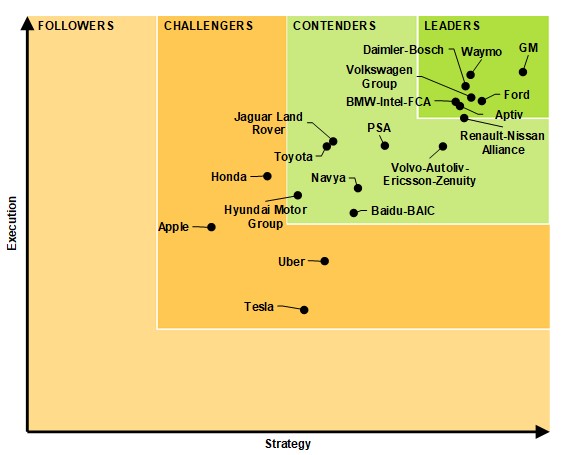

Hardware redundancy is one area where Tesla was not up there with the best, according to Navigant, a consulting group that ranks autonomous driving technology companies.

In addition to lacking lidar, Tesla cars likely won’t be able to drive without human intervention, since they don’t have technology installed to keep cameras and sensors clean in inclement weather or a dusty environment, concludes Navigant.

“We’ve had winter weather up here in Michigan, and when that stuff gets kicked up onto radar sensors, those sensors can’t see and the system can’t function,” said Navigant’s Abuelsamid.

Tesla isn’t building in levels of hardware redundancy into Autopilot, said Abuelsamid. Many of the leading automated driving companies, like GM, are using backup computing platforms, opting for two or even three computing systems. – GreenTechMedia

Navigant ranked Tesla dead-last in its assessment of strategy and execution for 19 companies developing Automated Driving Systems.

Now Tesla has turned everything on its head. The company is saying that not only will its processing power increase tenfold, but the computations will also be extremely localized, with full redundancy and fail-over.
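The tenfold figure follows directly from the two frame rates Musk quoted. As a rough sketch, the arithmetic can be checked in a few lines of Python. The function name `frame_budget_ms` is made up for illustration, "over 2,000" is treated as a lower bound of exactly 2,000, and the per-frame time assumes frames are processed one after another, which Tesla has not confirmed.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Average time available per frame, in milliseconds.

    Assumes frames are handled one at a time (no batching or pipelining),
    which is a simplification and not a statement about Tesla's design.
    """
    return 1000.0 / frames_per_second


# Figures quoted by Musk on the earnings call.
CURRENT_FPS = 200   # current NVIDIA-based hardware
NEW_FPS = 2000      # "over 2,000", so treated here as a lower bound

speedup = NEW_FPS / CURRENT_FPS

print(f"Throughput increase: at least {speedup:.0f}x")
print(f"Time per frame, current hardware: {frame_budget_ms(CURRENT_FPS):.1f} ms")
print(f"Time per frame, new chip: at most {frame_budget_ms(NEW_FPS):.1f} ms")
```

In other words, if the quoted numbers hold, the time spent on each frame drops from about 5 ms to 0.5 ms or less.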

Why Does it Matter?

Fully autonomous driving will only be possible when cars are able to drive themselves irrespective of weather and on all terrains. More importantly, they need to be able to manage on their own even when they are disconnected from the internet.

The only way to achieve that is by making the AI computer sitting inside the car self-sufficient, doing all the processing right next to the point of data generation and connecting to the mother-ship only to get updates. This will tremendously increase the workload of on-board systems, and according to Tesla, the current chips are good, but not good enough to handle such workloads.

So Tesla wants to build them from the ground up.