Whether we like it or not, we are now officially living in the age of artificial intelligence. After centuries, even millennia, of dreaming about automatons and smart machines, we are now seeing a torrent of real-life applications in the form of self-driving technology, thinking robots and intelligent products that some believe are rapidly bringing us closer to what is known as the Singularity: the point at which machines are able to do everything a human being can.

We’re not there yet, but some experts estimate that we could reach that point within the next five, ten or twenty years, while others think it will take closer to fifty.

What is Artificial Intelligence or AI?

To understand artificial intelligence, or AI, we must first understand natural intelligence, a primarily human attribute. Most animals have it too, in varying degrees, but it is highly limited in all but a few living beings. Plants have it as well, although not in the conventional sense that humans understand.

In its basic form, natural intelligence is the ability to think logically through a process and respond appropriately to a situation. It should not be confused with reflexive or reactive behavior, which is completely different, not only in the physiology of the process but also in how it has evolved over the course of life on Earth.

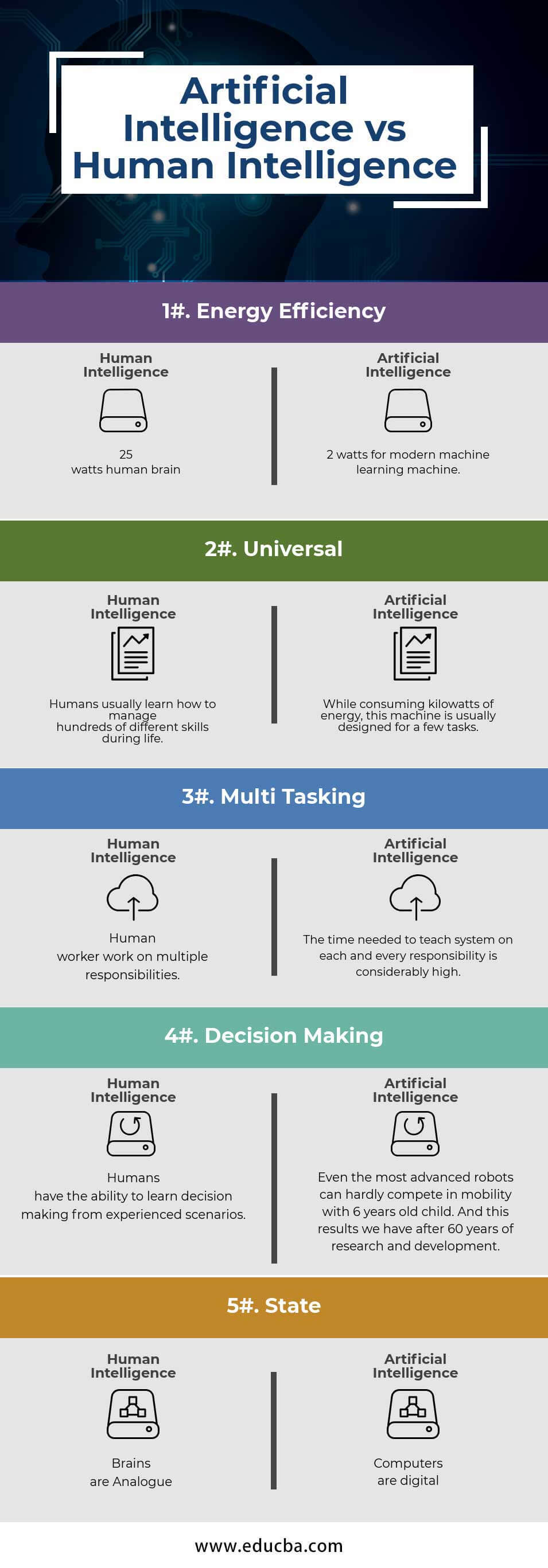

Interestingly, natural intelligence is often thought of as the opposite of artificial intelligence. Even if you don’t subscribe to that view, there are undeniably crucial differences between the two, at least at this point in the evolution of AI.

Despite the many differences, it is equally true that artificial intelligence is typically modeled on natural intelligence and, specifically, human intelligence.

Researchers at IBM have been working with Google’s DeepMind on something called an attention function, which is loosely modeled on the way the human brain focuses its attention. It tells the neural network what is important and what to pay attention to, which means the network can work with a much smaller portion of the data than machine learning algorithms typically process.

In other words, the attention system dramatically cuts the amount of data that the neural network has to access, and it does that by learning where to look for that data rather than going through the entire data set every time.
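To make the idea more concrete, here is a minimal sketch of generic scaled dot-product attention in Python. It is not the IBM/DeepMind system described above; the function names and the optional `top_k` parameter are assumptions chosen for illustration. A query vector is compared against a set of keys, the scores are turned into weights with a softmax, and the output is a weighted mix of the corresponding values. Standard "soft" attention still gives some weight to every item; the `top_k` option keeps only the best-matching items, which is one simple way of looking at a smaller portion of the data.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention(query: Sequence[float],
              keys: Sequence[Sequence[float]],
              values: Sequence[Sequence[float]],
              top_k: Optional[int] = None) -> Tuple[List[float], Dict[int, float]]:
    """Scaled dot-product attention for a single query.

    Returns the weighted output vector and a map from item index to its weight.
    If top_k is set (a hypothetical 'hard' variant), only the top_k
    best-matching items receive any weight at all.
    """
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d) as in Transformer models.
    scores = [dot(query, k) / math.sqrt(d) for k in keys]
    indices = list(range(len(keys)))
    if top_k is not None:
        indices = sorted(indices, key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in indices])
    output = [0.0] * len(values[0])
    for w, i in zip(weights, indices):
        for j, v in enumerate(values[i]):
            output[j] += w * v             # weighted mix of the selected values
    return output, dict(zip(indices, weights))
```

For example, `attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]], [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]], top_k=2)` ignores the second item entirely, because its key is the least similar to the query, and builds the output from the other two.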

And that essentially brings us to the different types of AI and what they’re used for.

Types of Artificial Intelligence

There are dozens of variations within each stream of AI study, but there are essentially four types of artificial intelligence: Reactive Machines, Limited Memory, Theory of Mind and Self-Awareness.

Think of this as an evolutionary hierarchy: the first two types make up the bulk of mainstream AI applications, while the last two are where we hope to be in the next twenty years or so.

Reactive Machines

Famous names in this category include IBM’s Deep Blue and Google’s AlphaGo. They lack memories and the ability to draw from past experiences, but they’re extremely good at reacting in predefined situations that have fixed variables.

To put it simply, they can beat a world champion at chess and other strategy games, but they cannot conceptualize real-world situations. For example, when Deep Blue is presented with a chess position, it draws only on what it currently sees on the board and, based on that, calculates the move most likely to lead to a favorable outcome.

Some notable AI researchers, such as Rodney Brooks, Panasonic Professor of Robotics (emeritus) at MIT, believe that only these types of machines should be built, because humans cannot program accurate simulations of real-world elements, or what are called representations. In a way, he may be right: machines without such representations are less prone to unpredictable behavior.

“They can’t interactively participate in the world, the way we imagine AI systems one day might. Instead, these machines will behave exactly the same way every time they encounter the same situation. This can be very good for ensuring an AI system is trustworthy: You want your autonomous car to be a reliable driver. But it’s bad if we want machines to truly engage with, and respond to, the world. These simplest AI systems won’t ever be bored, or interested, or sad.”
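The "look only at the current position and search for the best move" behavior can be illustrated with minimax search on tic-tac-toe. This is a toy sketch, not Deep Blue’s method (its real search was vastly more elaborate and ran on specialized hardware); the board encoding and the function names are assumptions made for illustration. Note that `best_move` keeps no record of earlier games, so it returns the same move every time it sees the same board, which is exactly the predictability described in the quote above.

```python
from typing import List, Optional

# A board is a list of 9 cells, each "X", "O" or " " (empty), read row by row.
Board = List[str]

# Index triples for the rows, columns and diagonals that win the game.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: Board) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def other(player: str) -> str:
    return "O" if player == "X" else "X"


def minimax(board: Board, player: str) -> int:
    """Value of the position with `player` to move: +1 X wins, -1 O wins, 0 draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0
    scores = []
    for i, cell in enumerate(board):
        if cell == " ":
            board[i] = player              # try the move...
            scores.append(minimax(board, other(player)))
            board[i] = " "                 # ...and undo it
    # X picks the highest value, O the lowest.
    return max(scores) if player == "X" else min(scores)


def best_move(board: Board, player: str) -> int:
    """Choose a move using only the current board; nothing is remembered between calls."""
    board = list(board)                    # work on a copy so the caller's board is untouched
    best_i, best_score = -1, 0
    for i, cell in enumerate(board):
        if cell != " ":
            continue
        board[i] = player
        score = minimax(board, other(player))
        board[i] = " "
        better = score > best_score if player == "X" else score < best_score
        if best_i == -1 or better:
            best_i, best_score = i, score
    return best_i
```

Because the function only ever looks at the board it is given, it cannot learn from a previous loss; doing so would require somewhere to keep that history.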

Limited Memory

This group of machines can draw on the past when handling present situations. Take autonomous, or self-driving, cars as an example. Their representations are predefined and based on real-world components, such as lane markings or road signs. Over time, these machines make observations that are then added to these representations of the world. However, such representations are often temporary and are used only when required, for example when the car is deciding whether to change lanes to avoid an accident.

Such Type II machines cannot build full representations of the real world as humans can, and bridging that gap is the real challenge in moving from basic AI to genuinely human-like behavior.
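The idea of short-lived observations feeding a decision can be sketched with a fixed-size buffer. The class below is hypothetical and deliberately simplistic (real autonomous-driving software is far more involved): `LaneChangeAdvisor` keeps only the last few measurements of the gap to the nearest car in the target lane, estimates how quickly that gap is closing, and projects it a few seconds ahead before approving a lane change. The window size, minimum gap and time horizon are illustrative assumptions, not real-world values.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    time_s: float  # timestamp in seconds
    gap_m: float   # distance to the nearest car in the target lane, in meters


class LaneChangeAdvisor:
    """Toy 'limited memory' agent: it remembers only its last few observations."""

    def __init__(self, window: int = 5, min_gap_m: float = 20.0, horizon_s: float = 3.0):
        self.recent = deque(maxlen=window)  # oldest observations drop out automatically
        self.min_gap_m = min_gap_m
        self.horizon_s = horizon_s

    def observe(self, obs: Observation) -> None:
        self.recent.append(obs)

    def closing_speed(self) -> float:
        """Rate (m/s) at which the gap is shrinking over the window; 0 if unknown."""
        if len(self.recent) < 2:
            return 0.0
        first, last = self.recent[0], self.recent[-1]
        dt = last.time_s - first.time_s
        if dt <= 0:
            return 0.0
        return (first.gap_m - last.gap_m) / dt

    def safe_to_change_lanes(self) -> bool:
        if not self.recent:
            return False                    # no information yet: stay in lane
        # Project the current gap a few seconds ahead using the recent trend.
        projected = self.recent[-1].gap_m - self.closing_speed() * self.horizon_s
        return projected >= self.min_gap_m
```

Once the window is full, each new measurement silently pushes out the oldest one; nothing is kept as long-term experience, which mirrors the temporary representations described above.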

Theory of Mind

The difference between this class of machines, Type III, and the more basic types is that these machines form functional representations not only of the world but also of other entities. This is the key to human social behavior. We understand that other people, and even objects, have minds or representations of their own, and we take that into consideration when making decisions.

This is probably the greatest hurdle at present. Machines today, no matter how “smart” they appear to be, are still either reactive or limited in memory. They can’t form associations or representations based on experiences collected throughout their existence. In some ways, this is what defines human beings and allows us to build and maintain civilized societies.

One of the key requirements for this type of machine is that it must understand that others perceive things differently than it does. A famous experiment called the Sally-Anne task highlights this ability:

“In the experiment, children were presented with two dolls, Sally (who has a basket) and Anne (who has a box). Sally puts a marble in her basket, and leaves the room. While Sally is away, Anne takes the marble from the basket, and hides it in her box. Finally, Sally returns to the room, and the child is asked three questions:

- Where will Sally look for her marble? (The “belief” question)
- Where is the marble really? (The “reality” question)
- Where was the marble at the beginning? (The “memory” question)

“The critical question is the belief question – if children answer this by pointing to the basket, then they have shown an appreciation that Sally’s understanding of the world doesn’t reflect the actual state of affairs. If they instead point to the box, then they fail the task, arguably because they haven’t taken into account that they possess knowledge that Sally doesn’t have access to. The reality and memory questions essentially serve as control conditions; if either of these are answered incorrectly, then it might suggest that the child didn’t quite understand what was going on.”

It is generally believed that children develop theory of mind at around age four. Type III machines should exhibit the same kind of understanding, but we aren’t there yet.
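The bookkeeping that the belief question requires can be written down directly. The sketch below is hand-coded, so passing the task this way says nothing about a machine genuinely having a theory of mind; it only shows that answering correctly means tracking each agent’s beliefs separately from the real state of the world. The `World` class, its methods and the `sally_anne` function are hypothetical names chosen for illustration.

```python
from typing import Dict, List, Set, Tuple


class World:
    """Tracks where objects really are, plus what each agent believes."""

    def __init__(self, agents: List[str]):
        self.location: Dict[str, str] = {}            # actual state of the world
        self.initial: Dict[str, str] = {}             # first place each object was put
        self.present: Set[str] = set(agents)          # agents currently in the room
        self.beliefs: Dict[str, Dict[str, str]] = {a: {} for a in agents}

    def put(self, obj: str, place: str) -> None:
        self.location[obj] = place
        self.initial.setdefault(obj, place)
        # Only agents who are present see the change and update their beliefs.
        for agent in self.present:
            self.beliefs[agent][obj] = place

    def leave(self, agent: str) -> None:
        self.present.discard(agent)

    def enter(self, agent: str) -> None:
        # Returning does not reveal what happened while the agent was away.
        self.present.add(agent)


def sally_anne() -> Tuple[str, str, str]:
    """Answer the belief, reality and memory questions, in that order."""
    w = World(["Sally", "Anne"])
    w.put("marble", "basket")   # Sally puts the marble in her basket
    w.leave("Sally")            # Sally leaves the room
    w.put("marble", "box")      # Anne moves it; only Anne sees this
    w.enter("Sally")            # Sally comes back
    belief = w.beliefs["Sally"]["marble"]
    reality = w.location["marble"]
    memory = w.initial["marble"]
    return belief, reality, memory
```

Running `sally_anne()` returns `("basket", "box", "basket")`. A program that answered the belief question by simply reading `location` would, like the child who points to the box, fail the task.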

Self-awareness

This is the highest class of intelligent machines, and some believe that we won’t get there even in the next twenty years. In many ways, these Type IV machines are considered an extension of Type III in which consciousness has developed, and being aware of that consciousness is what makes this the highest form of artificial intelligence. In humans, this ability lets us be aware not only of our own consciousness but also of the consciousness of others, which is where traits like empathy become relevant.

A self-aware machine must be able to integrate itself into human society and learn from experience. It should get “wiser” as it matures, gaining an ever deeper understanding not only of itself but of others as well. This highly complex type of human behavior has never been emulated in a machine, and therein lies the big gap for AI researchers: they must first master the first two types of AI before they can think of building Type III and Type IV machines.

And it’s not for want of trying that we haven’t achieved that yet. There are research projects all over the world that are seeking to cross that bridge and create a machine that can form its own representations of the world, interact with humans on the same level and do things that an average human takes for granted.

Are we even close to achieving truly human-like artificial intelligence? Yes and no. There are machines that can do things far better than humans, like working with numbers or other logical concepts. It’s the abstract that stumps them.

But if humans are known for anything, it is persistence. We will stop at nothing until we achieve the highest level of artificial intelligence. The question is whether that will be a good thing for humanity, given that humanity itself is a mix of the good, the bad and the ugly. That’s something to think about.