Smartphones are getting bigger, creeping into tablet territory with each passing year. Even Apple has recognized this need and is making larger screen sizes available. But as the form factor evolves, there’s something else inevitably making its way into every hand-held device on the market: Artificial Intelligence, or AI.

AI is not new to smartphones. We’ve had Siri for several years now, and Google Assistant, Cortana and Alexa are now making their way into the bulk of non-Apple devices.

We talk about smart homes, smart cars and smart offices, but what is the one thing we carry around wherever we go? No, it’s not your credit card. It’s your phone. Some people can’t even go to the bathroom without updating their Facebook status.

Smartphones aren’t really smart yet. Yes, they can access voice-enabled services, there are intelligent apps for various purposes and so on. But there’s still some intelligence quotient lacking on the OS front. Android is not an intelligent operating system. Google claimed that Android 9.0 Pie is “powered by AI”, but what does that really mean?

Android Pie comes with adaptive and smart features that are the first layer of AI, so to speak. It is limited to reactive features that first learn a user’s preferences and then tweak notifications, message replies, screen brightness, etc. based on these dynamic choices. The real AI has to come from within.
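To picture what “learn a user’s preferences and then tweak” might look like, here is a minimal Python sketch. It is not how Android Pie actually works; the `BrightnessLearner` class, the light buckets and the learning rate are all hypothetical, chosen only to show the reactive pattern: watch what the user picks, then nudge the stored preference toward it.

```python
class BrightnessLearner:
    """Toy model: remembers a preferred brightness per ambient-light bucket."""

    def __init__(self, rate=0.5):
        self.rate = rate        # how quickly new choices override old ones (assumed value)
        self.preferred = {}     # ambient-light bucket -> learned brightness (0-100)

    def observe(self, ambient, chosen):
        """Record a manual adjustment and move the preference toward it."""
        old = self.preferred.get(ambient, chosen)
        self.preferred[ambient] = old + self.rate * (chosen - old)

    def suggest(self, ambient, default=50):
        """Return the learned brightness, or a default for unseen conditions."""
        return self.preferred.get(ambient, default)


learner = BrightnessLearner(rate=0.5)
learner.observe("dark", 20)   # first choice in a dark room
learner.observe("dark", 40)   # the user later prefers it a bit brighter
print(learner.suggest("dark"))    # halfway between the two choices
print(learner.suggest("bright"))  # nothing learned yet, falls back to the default
```

Nothing here anticipates what the user wants; it only reacts to past choices, which is the sense in which this layer is “reactive”.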

On the hardware side, companies like ARM are experimenting with the Arm DynamIQ microarchitecture for more intelligent mobile solutions. Qualcomm is already on its third-generation intelligent mobile platform with the Snapdragon™ 845 processor, which is designed for more intelligent voice, image and audio processing for VR and AI applications.

NVIDIA, Intel and all the other chipmakers of the world are now racing to create AI platforms for mobile devices and IoT products. There are also dozens of startups in this space because we’re only just scratching the surface.

So, why aren’t smartphones of today a lot more intelligent than, say, five years ago? Why isn’t there a phone yet that can call in sick for you at the office? Why can’t my phone give me quick-response voice options to brush off unwanted callers? There’s a good reason for that.

First of all, artificial intelligence has to be applied at various levels in a smartphone: the hardware (silicon, sensors, etc.), the operating system and the apps. Because these are made or written by different companies, they don’t always play nice with each other. Typical!

That being said, companies like Google and Microsoft have realized that not having hardware under their umbrella of influence was a major disadvantage, hence Surface and Pixel. These are now strong divisions within these companies, making their mark on the devices market. By emulating Apple and owning both the hardware and the software (OS), both Google and Microsoft can make products that are better suited for AI tasks.

However, there’s a second challenge: processing power. To show you what that challenge looks like, take a look at IBM’s 14nm AI accelerator chip that can process an amazing 12 trillion operations per second at 2-bit precision. It also circumvents data flow bottlenecks around the chip using a “customized” data flow system and a “scratch pad” chip memory rather than the traditional cache used for CPUs and GPUs.

The problem is that, although the chip’s architecture makes it small enough for mobile devices, it is by no means a mainstream product. Other companies are trying to get there, but chip size is tightly constrained in mobile devices like smartphones.
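As an aside on that “2-bit precision” figure: two bits can only represent four distinct values, which is a big part of why such chips can do so many operations per second. The Python sketch below shows generic uniform quantization under that assumption. It is not IBM’s method, and the `quantize` function and sample weights are made up for illustration.

```python
def quantize(values, bits=2):
    """Map floats onto 2**bits evenly spaced levels between their min and max."""
    levels = 2 ** bits                      # 2 bits -> 4 representable values
    lo, hi = min(values), max(values)
    if hi == lo:                            # all values identical: one level is enough
        return [0] * len(values), list(values)
    step = (hi - lo) / (levels - 1)
    codes = [round((v - lo) / step) for v in values]   # integers in 0..levels-1
    restored = [lo + c * step for c in codes]          # what the chip "sees"
    return codes, restored


weights = [-1.0, -0.2, 0.1, 1.0]            # hypothetical model weights
codes, restored = quantize(weights, bits=2)
print(codes)
print(restored)
```

Each weight is squeezed into one of four codes, so some detail is lost. That trade of accuracy for speed and power is exactly what low-precision accelerators are betting on.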

That’s why it makes sense for companies like Apple and Google to make their own processors as well. Microsoft was left behind because of its relationship with Intel, but even Microsoft is now pursuing the ARM architecture over the traditional x86 instruction set that is Intel’s staple.

Their dream of Windows 10 on ARM might still yield results in the coming years. Maybe not in the form of the Surface Phone we’ve all been expecting, but definitely something that will bridge the gap between desktop and mobile.

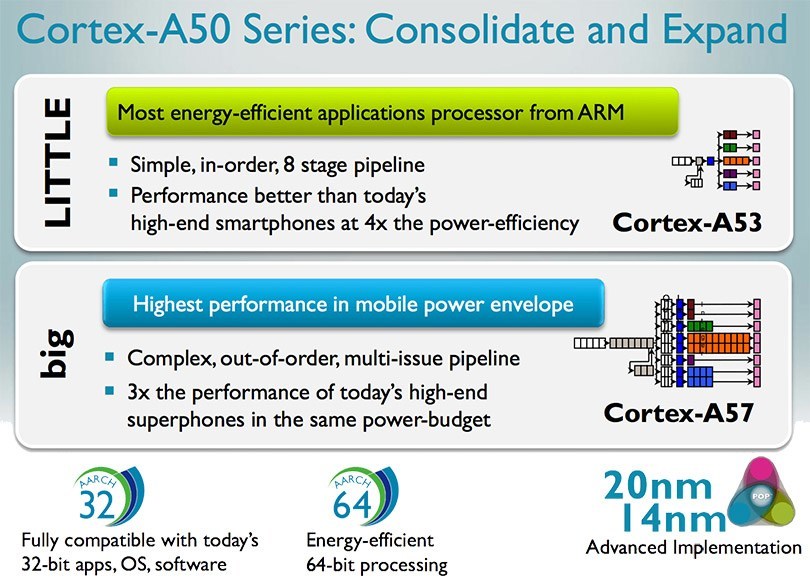

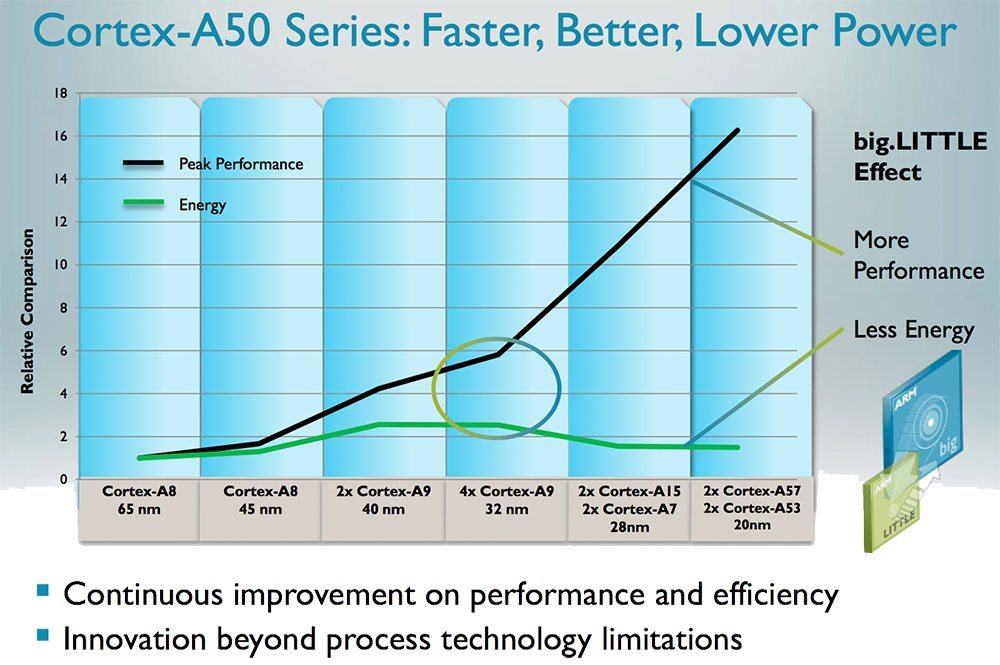

The third major challenge is battery power, and it’s closely linked to processing power. ARM’s Cortex-A50 series is one of the few processor families that focus on low-power, high-performance mobile computing.

ARM’s big.LITTLE processing technology is, again, an emergent concept not yet ready to go into mainstream products. For one, it’s made up of heterogeneous cores, which means the underlying operating system needs to know this and assign tasks to the appropriate core.

Conventional OSes don’t do that, so ARM had to design the big.LITTLE MP scheduler for a Linux kernel that could be tweaked to do exactly that. Further advancements are possible with different big.LITTLE configurations, but again, none of this is ready for the limelight even though these microprocessors can utilize less energy even at peak performance.
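To make “assign tasks to the appropriate core” a little more concrete, here is a toy Python sketch. It is not ARM’s big.LITTLE MP scheduler, which lives in the Linux kernel and is far more involved; the `Task` class, the `load` estimate and the 0.6 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    load: float  # hypothetical estimate of CPU demand, from 0.0 to 1.0


def assign_core(task, threshold=0.6):
    """Send demanding tasks to a big core and light ones to a LITTLE core."""
    return "big" if task.load >= threshold else "LITTLE"


def schedule(tasks, threshold=0.6):
    """Group task names by the kind of core they would run on."""
    placement = {"big": [], "LITTLE": []}
    for task in tasks:
        placement[assign_core(task, threshold)].append(task.name)
    return placement


tasks = [
    Task("email_sync", 0.1),
    Task("video_encode", 0.9),
    Task("music_playback", 0.2),
]
print(schedule(tasks))
```

The point of the split is that background chores sip power on the small cores while the big cores wake up only for heavy lifting. An OS that treats every core as identical can’t make that distinction.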

So, there are three key hurdles to be crossed before we can see some really intelligent smartphones.

One thing we cannot ignore is that we’re on the verge of something huge: a major disruption of the smartphone industry is in the offing, one that will eventually give birth to a new generation of intelligent devices that are more personal assistant than personal confidante.

How can we be sure it will happen?

One clue is that all the hardware and software bits are slowly being aligned to an inevitable AI-driven future. Market demand is probably the biggest driver of innovation, and the market is ready for smarter products. The underlying infrastructure in terms of processing and power consumption will inevitably follow.

The second clue as to why smartphones will soon become more intelligent is that many of our daily needs with respect to our smartphones can be handled using machine learning. The deep learning that requires tremendous computing power is not yet required on hand-held phones. We simply need more tasks to be automated and the system itself to be intuitive and ready to learn from us.

All of these different elements are finally coalescing to form the foundation for the world’s first Intelligent Phone.

This “Intelliphone”, as I call it, will be my personal secretary. It will grab bits of my daily schedule from emails, texts, calendar appointments, reminders and alerts and push them to the screen when required. It will be able to remind me to eat and drink water through the day. It will set the navigation on my car’s infotainment system based on the priority of chores I need to finish and errands I need to run. It’ll tell me when my boss has left the office. It’ll tell my home to turn on the heater before I get there.

Such an Intelliphone is not yet available, but we’re seeing some of these integrations via apps. I’m waiting for the day when all of these functionalities are hardwired into my device, not layered on top of each other the way they are now.

And this is possible only through a deep collaboration of companies that are experts in their respective segments, or through a single company that can make the whole package and so ensure deeper integration of these functions into the very chipsets and operating systems that go into these devices.