A cognitive bias is an impediment to rational thinking that clouds judgement and may lead to an erroneous decision. In a sense, we all create our own “realities” based on our experiences, our (often) limited knowledge and even our memory of events, people and places. These realities, being relative rather than absolute, may result in wrong decisions, perception and judgement.

In machine learning, the data that is input is directly responsible for the resulting output. When that data contains biases, such as lacking coverage of a particular type or leaning heavily towards one specific type, the results are often skewed and inaccurate.
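One rough way to spot this kind of skew before training is to look at how the labels are distributed. The snippet below is only a minimal sketch: the function names, the toy labels and the 10% threshold are all assumptions made for illustration, not part of any particular library or of the cases discussed in this article.

```python
from collections import Counter


def class_shares(labels):
    """Return each label's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def flag_imbalance(labels, min_share=0.10):
    """List labels whose share falls below min_share (an arbitrary, assumed threshold)."""
    shares = class_shares(labels)
    return sorted(label for label, share in shares.items() if share < min_share)


# Hypothetical toy labels: "cat" dominates, "dog" and "bird" are barely covered.
labels = ["cat"] * 90 + ["dog"] * 8 + ["bird"] * 2

shares = class_shares(labels)
underrepresented = flag_imbalance(labels)

print(shares)
print(underrepresented)
```

A check like this only catches labels that are present but rare. A type that is missing from the data altogether will not show up in the counts at all, so it still has to be noticed by a human.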

Several instances of data biases resulting in cognitive biases have been recorded, some more infamous than others.

In one such instance, a tweet called out the machine learning algorithms behind Google Photos for identifying a young woman’s face as that of a gorilla. Google later apologized, saying it was “appalled and genuinely sorry,” and promised to fix the problem. As Wired reported, however, the underlying problem had still not been fixed three years down the road. What Google did instead was simply remove the image categories for “gorilla,” “chimp,” “chimpanzee,” and “monkey”.

Cognitive biases happen all the time in the realm of human logic. If we had to process every single relevant piece of information pertaining to every decision we made, we’d never get anything done. What helps us make decisions quickly (and often erroneously) is something called heuristics.

Heuristics are essentially mental shortcuts comprising “simple, efficient rules” that people use to make decisions and form judgements. While they are mostly accurate, they can sometimes be completely off the mark.

Optical illusions make use of cognitive biases to fool the brain into thinking it’s seeing one thing, while the reality of it is completely different. Israeli-American economist and psychologist Daniel Kahneman, joint winner of the 2002 Nobel Memorial Prize in Economic Sciences, extensively studied these biases and the reasons for them.

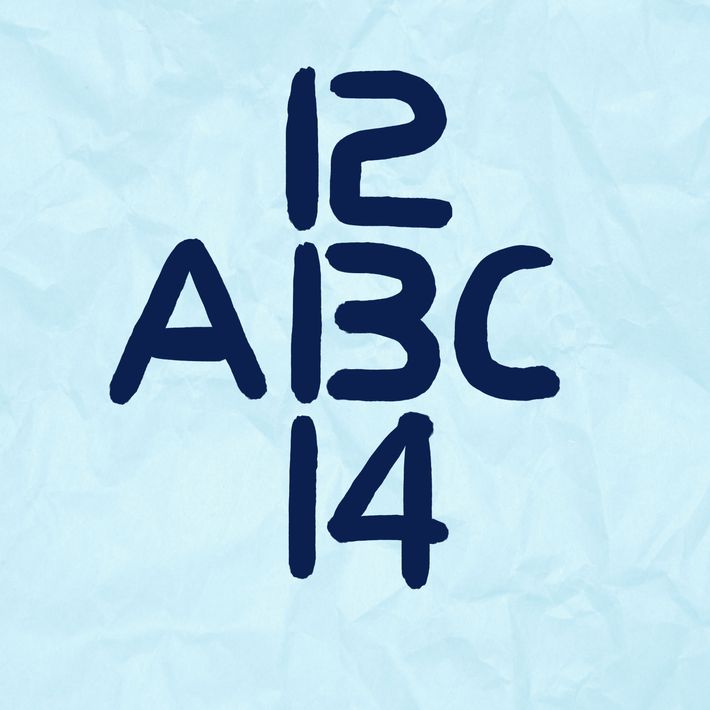

A simple example by The Cut shows this type of “cognitive illusion” in action. Consider the image below:

Whether you read the figure in the middle as a B or the number 13 depends on your interpretation. If you’re reading it left to right, your mind automatically uses a heuristic function to interpret it as a B. If you read it from top to bottom, it’s interpreted as the number 13.

Such biases are an integral part of human thinking. They are useful because they filter out irrelevant information that would otherwise delay a decision or a judgement, but that brings the criteria for deciding relevance into focus. How are the various data points determined to be relevant or irrelevant to a particular decision-making process? That’s what heuristics are all about. We use these mental shortcuts to make better sense of the world, but the sense we make might not match absolute reality – or even reality as perceived by another human.

Machine learning is infused with such biases from the get-go, often because the biases of those training these models are carried over into variance – or lack thereof – in the training data. In other words, the biases in the data are often a reflection of the biases of the individual selecting the data for the training.

Psychologists have studied many cognitive biases extensively. Here are 12 of them, in no particular order.

Confirmation Bias – Humans love their physical comfort zones, but their mental comfort zones or their beliefs are often elevated to the level of sacredness. Confirmation bias happens when we interpret things to fit in with what we already believe. It becomes a further validation of our beliefs, strengthening them and cementing our perception of them as true reality.

Current Moment Bias – This is when short-term decisions overrule long-term thinking. Our need for instant gratification is related to this. We know that fruit is healthier than chocolate, for example, but a 1998 study by D. Read and B. van Leeuwen showed that while 74% of participants chose fruit for their weekly diet, 70% of those same participants picked chocolate as their daily preference. In a sense, we’re willing to accept bad things in the future if we get good things now.

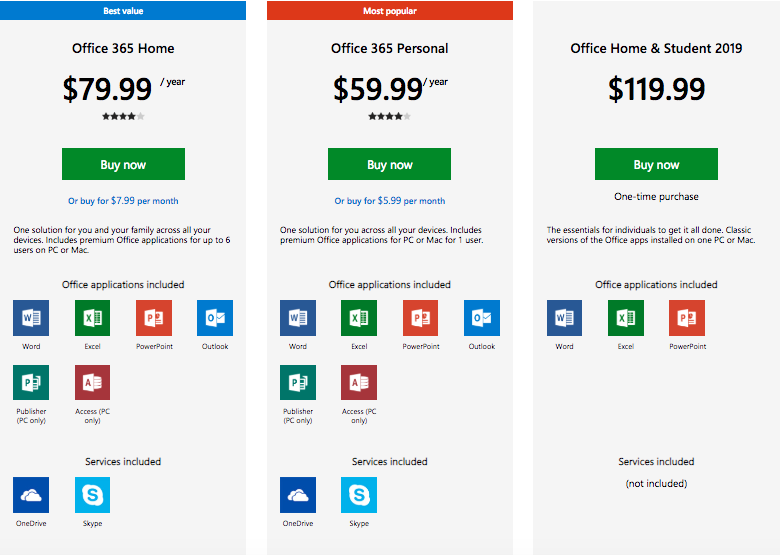

Relativity Trap or Anchoring Effect – This bias is used to great effect in sales and marketing. Imagine a smartphone priced at $1000 versus a different brand of smartphone with similar features priced at say $800. Most consumers would gravitate to the lower price without even realizing that $800 is still expensive for a smartphone. Marketers regularly exploit the relativity trap to get their prospects to choose what’s called “the middle option”, often showcasing it as the one offering the best value for money. Here’s an example:

So what is the “best value” in this scenario? The $79.99 seems to be the best and is highlighted as such. The $59.99 package is similar, but it’s only for a single user against six users for the $79.99 package. The $119.99 isn’t even highlighted, but look closer and you’ll see that it’s a one-time payment versus annual billing for the other two. There’s a good reason the “/year” is in really small font. They don’t want you to focus on that. This is a classic example of the relativity trap in action.

Projection Bias – It is in our nature to believe that most other people think like us. It is also in our nature to believe that they agree with us on most things. This type of bias arises from these beliefs, and is related to something called false consensus bias, where both these beliefs ‘collude’ – to use Trump’s favorite word – to make us think that others agree with us so we must be right.

Status Quo Bias – Few of us like change, and most of us resist it at every turn. So, if there’s a line of thinking that supports the belief that “if it ain’t broke, don’t fix it,” then we’re succumbing to the status quo bias. This bias arises from our discomfort at leaving our comfort zones. If there’s a way to keep on doing what we’re doing, we’ll take that route whether or not it represents absolute reality. The false assumption here is that any change will make things worse.

Bandwagon Effect – Related to the projection bias, this type of cognitive bias is based on our need to belong to a like-minded group. Call it peer pressure or hive mind mentality or whatever else you want, this bias arises from wanting to

Neglecting Probability – Why do we worry more about a terrorist attack than slipping and falling in the bathroom? It is the human habit of neglecting proven probabilities because we are unable to grasp the realities of risk and danger. That doesn’t mean we should overcompensate in one area and not worry about the other. It’s the lack of perspective that brings this bias into play. By neglecting to factor in the probability of one thing over another thing happening, we often put ourselves in unnecessary danger.

Negativity Bias – Why are newspapers full of bad news? It’s because the collective human consciousness focuses more on the bad and less on the good, and that helps sell more copies.

Gambler’s Fallacy – Take a simple coin toss. If the first three tosses are all heads, our expectation of tails in the next toss increases, and it keeps increasing with each heads that appears. The reason that’s a fallacy is that there’s actually no change with respect to probability. The chances of each toss ending up heads or tails are always equal – as long as the coin hasn’t been doctored, that is. It is merely our expectation that “things have got to go my way finally.” Someone who’s been married and divorced twice is convinced that the “third time’s a charm.”
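The coin toss claim is easy to check with a quick simulation. The snippet below is a rough sketch that assumes a fair coin modelled with Python’s pseudo-random generator. The function name, the number of tosses and the seed are arbitrary choices made for illustration. It counts how often heads comes up immediately after a run of three heads.

```python
import random


def heads_rate_after_streak(n_tosses=200_000, streak=3, seed=0):
    """Estimate how often heads follows `streak` consecutive heads with a fair coin."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    tosses = [rng.random() < 0.5 for _ in range(n_tosses)]  # True means heads

    # Keep only the tosses that come right after a full streak of heads.
    followups = [
        tosses[i]
        for i in range(streak, n_tosses)
        if all(tosses[i - streak:i])
    ]
    return sum(followups) / len(followups)


rate = heads_rate_after_streak()
print(f"Share of heads right after three heads in a row: {rate:.3f}")
```

If the gambler’s intuition were right, the printed share would sit well below one half. With a fair coin it should land close to 0.5, because the streak does nothing to the next toss.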

Observational Selection Bias – If you buy a new BMW, you’ll suddenly start noticing a lot more BMWs on the road and might even think that more people have suddenly started to buy BMWs. What compounds this bias is that you’ll start noticing more of the same color or model of BMW. There’s no truth to that, of course, but it’s in our nature to assume that the things we notice are increasing in terms of frequency of occurrence. It’s our selection of what we notice that has changed, nothing more.

Post-purchase Rationalization – You know that the TV you bought is a piece of crap, but if anyone tries to attack it in front of others, you’ll be the first to jump to its defence. It is sometimes called Buyer’s Stockholm Syndrome. We justify a bad purchase simply because we want to remain committed to the decision to make it in the first place. So we rationalize and try to convince ourselves (often to no avail) that we did, in fact, make the right choice. It is closely related to the sunk cost fallacy.

Ingroup Bias – This is one of the more dangerous kinds of bias because, while it gives us an inclusive mindset within the group, it makes us distrustful of anyone outside of that group. Cults are an extreme example of this, but such biases exist in all types of tight-knit groups like fraternities, clubs and so on. We place undue faith in the group, not realizing that we’re actually trusting individuals whom we might not even know that well. The faith in the group is thereby transferred to its individual members, and anything the group believes is what we end up believing, even if it’s nowhere close to the truth.

Can Machine Learning Models Exhibit All These Cognitive Biases?

For the present, no. Some of these biases, as you’ll notice, are based on agreement between ourselves and other entities, and neural networks don’t yet have the ability to understand the representations of others, let alone be empathetic towards them.

The more common biases in machine learning have to do with wrongly recognizing patterns that aren’t there, as in the case of the young African-American lady who was tagged as a gorilla. I won’t say it’s a simple problem, because it isn’t. But it’s something we need to recognize in the early stages of training an ML model. Such biases can creep in very easily if we’re not watchful.
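One way to stay watchful is to break a model’s accuracy down by group instead of looking only at the overall number. The snippet below is a hedged sketch of that idea. The helper name, the group labels “A” and “B” and the toy predictions are all made up for illustration and do not come from any real system.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy data: group "A" is well covered, group "B" is not.
y_true = ["person"] * 8
y_pred = ["person"] * 5 + ["person", "other", "other"]
groups = ["A"] * 5 + ["B"] * 3

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(per_group)
print(overall)
```

In this toy case the overall accuracy looks acceptable, yet the breakdown shows that nearly all of the mistakes fall on the smaller group. That is the kind of gap a single headline number can hide.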

That’s why the training data is so important. Objectivity can be lost in an instant without our realizing it. That being said, we’re never going to be bias-free when it comes to machine learning. As long as human biases exist, machine biases will, too.

Someday in the future, machines may figure out a way to weed out these biases. We have no way of knowing that, so that’s obviously an optimistic view. Just my own bias talking, I guess. 🙂

I’ll leave you with this…