Deep learning is often said to be modeled after the human brain. How true is that? Humans tend to generalize from one or two common elements, and that may well be what has happened with artificial neural networks (ANNs) and deep neural networks (DNNs). In practice, ANNs are extremely reductive versions of biological neural networks (BNNs), which are very different from, and vastly more complex than, even the most complex deep neural nets.

Here are some definitions of ANNs from highly respected websites that helped popularize the idea that artificial nets are similar to their biological equivalents. Bold lettering is ours, for emphasis.

“Artificial neural networks (ANN) or connectionist systems are computing systems inspired by the biological neural networks that constitute animal brains.” – Wikipedia

“An artificial neural network is an attempt to simulate the network of neurons that make up a human brain so that the computer will be able to learn things and make decisions in a humanlike manner.” – Forbes

“ANNs are a very rough model of how the human brain is structured.” – Medium

“Artificial Neural Networks are relatively crude electronic models based on the neural structure of the brain.” – University of Toronto

“As the “

“Neural networks are a set of algorithms, modeled loosely after the human brain, that

A common thread runs through all these definitions: we’re a long, long way from creating ANNs that are anywhere near as functional as an animal brain, let alone a human one. The average human neuron has between 1,000 and 10,000 connections with other neurons, and that number can go as high as 200,000.

In stark contrast, the layers of a typical artificial neural net connect only to neighboring layers (although LSTMs and other recurrent networks can loosely mimic loops). They also compute one layer at a time, unlike biological NNs, which can fire asynchronously and in parallel.
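To make the "one layer at a time" point concrete, here is a minimal sketch of a feedforward pass in plain Python. The toy network shape, the weights, and the helper names (`dense_layer`, `forward`) are all hypothetical and chosen only for illustration; real frameworks do the same thing with optimized matrix operations.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: every output neuron sees every input."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


def forward(inputs, layers):
    """Run the layers strictly in order.

    Layer k only ever receives the output of layer k-1, and no layer
    starts computing until the previous one has finished.
    """
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = forward([1.0, 2.0], layers)
print(output)
```

Nothing in this loop resembles neurons firing asynchronously: the computation is a fixed, sequential pipeline.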

There are also other major differences between ANNs and BNNs, as listed below:

Size: ANNs typically have 10–1000 “neurons”, while BNNs have as many as 86 billion, with connections estimated at between 100 trillion and 1000 trillion (1 quadrillion). The largest ANNs today have about 16 million neurons but, the larger they get, the harder they are to train. Emerging methods like Sparse Evolutionary Training (SET) allow for faster training, which means you can build and train an ANN of up to 1 million neurons on a laptop with SET. This method was developed by an international team from Eindhoven University of Technology, University of Texas at Austin, and
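As a rough illustration of the idea behind sparse training, the sketch below shows one prune-and-regrow step of the kind SET is generally described as using: drop the weakest connections, then add the same number of new ones at random positions. This is not the authors' implementation. The dictionary representation, the function name `prune_and_regrow`, the 30% fraction, and the Gaussian initialization are all assumptions made for the example.

```python
import random


def prune_and_regrow(connections, n_in, n_out, fraction, rng):
    """One SET-style rewiring step on a sparse layer (illustrative only).

    `connections` maps (input_index, output_index) -> weight.
    Pairs that are absent from the dict are non-connections.
    """
    n_remove = int(len(connections) * fraction)

    # Drop the weakest connections, i.e. the weights closest to zero.
    by_magnitude = sorted(connections, key=lambda pair: abs(connections[pair]))
    survivors = {pair: connections[pair] for pair in by_magnitude[n_remove:]}

    # Regrow the same number of connections at random empty positions,
    # so the total number of connections stays constant.
    empty = [
        (i, j)
        for i in range(n_in)
        for j in range(n_out)
        if (i, j) not in survivors
    ]
    for pair in rng.sample(empty, n_remove):
        survivors[pair] = rng.gauss(0.0, 0.1)  # assumed small random init
    return survivors


# Hypothetical sparse layer: 6 inputs, 4 outputs, 10 of 24 possible links.
rng = random.Random(0)
n_in, n_out = 6, 4
all_pairs = [(i, j) for i in range(n_in) for j in range(n_out)]
layer = {pair: rng.gauss(0.0, 1.0) for pair in rng.sample(all_pairs, 10)}

rewired = prune_and_regrow(layer, n_in, n_out, fraction=0.3, rng=rng)
print(len(layer), len(rewired))
```

Because the connection count never grows, memory and compute scale with the number of connections rather than with the full input-by-output grid, which is what makes larger layers tractable on modest hardware.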

Speed: Biological neurons can fire up to about 200 times per second, limited by what are known as refractory periods, during which a new action potential cannot be sent because sodium channels are locked out. ANNs have no refractory periods. Training and execution of a model can be made faster, but the calculation speed of the backpropagation and feedforward algorithms carries no information. In ANNs, information is carried by the continuous, floating-point values of the synaptic weights, whereas in BNNs it is carried by firing mode and frequency.
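The difference between the two ways of carrying information can be sketched in a few lines. The example assumes a 5 ms refractory period, a value picked purely so that the ceiling matches the 200-per-second figure above, and it models spikes as perfectly evenly spaced, which real neurons are not. The function names are hypothetical.

```python
def max_firing_rate_hz(refractory_period_s):
    """A neuron cannot fire again until the refractory period has passed."""
    return 1.0 / refractory_period_s


def spike_count(requested_rate_hz, duration_s, refractory_period_s):
    """Number of evenly spaced spikes in a window, capped by the refractory limit."""
    rate = min(requested_rate_hz, max_firing_rate_hz(refractory_period_s))
    # The tiny epsilon guards against floating-point rounding just below an integer.
    return int(duration_s * rate + 1e-9)


REFRACTORY_S = 0.005  # assumed value for illustration only

# Biological-style signal: the "value" is how often the neuron fires.
weak_signal = spike_count(50, 1.0, REFRACTORY_S)
strong_signal = spike_count(500, 1.0, REFRACTORY_S)  # hits the ceiling

# ANN-style signal: the "value" is a single floating-point number,
# with no notion of time or of a maximum rate.
weight = 0.37
ann_signal = weight * 2.0

print(weak_signal, strong_signal, ann_signal)
```

However fast the hardware runs the last two lines, the result is the same number; in the rate-coded version, the timing is the message.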

Topology: As we saw, ANNs compute layer by layer: the feedforward pass goes sequentially one way through the layers, and backpropagation computes weight changes in the opposite direction so that the difference between the output layer’s results and the expected results is minimized. ANN layers are usually fully connected, unlike biological NNs, which have a small number of “hubs” (highly connected neurons) and a larger number of less-connected neurons. This gives BNNs a small-world nature, which has to be mimicked in ANNs by introducing weights with a value of 0 to represent a non-connection between artificial neurons.
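Representing a non-connection with a zero weight can be sketched as a binary mask over an otherwise fully connected layer. The names `masked_layer` and `masked_update`, the toy numbers, and the use of a plain gradient step are assumptions for illustration; the activation function is left out to keep the arithmetic visible.

```python
def masked_layer(inputs, weights, mask):
    """Linear layer in which mask[j][i] == 0 marks a non-connection."""
    outputs = []
    for neuron_weights, neuron_mask in zip(weights, mask):
        total = sum(
            w * m * x for w, m, x in zip(neuron_weights, neuron_mask, inputs)
        )
        outputs.append(total)
    return outputs


def masked_update(weights, grads, mask, learning_rate):
    """Gradient step that keeps masked-out weights pinned at zero."""
    return [
        [(w - learning_rate * g) * m for w, g, m in zip(w_row, g_row, m_row)]
        for w_row, g_row, m_row in zip(weights, grads, mask)
    ]


# Hypothetical layer: 3 inputs, 2 output neurons.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, 0.5, 0.5], [1.0, -1.0, 2.0]]
mask = [[1, 0, 0], [0, 1, 1]]  # neuron 0 sees input 0 only; neuron 1 sees inputs 1 and 2

outputs = masked_layer(inputs, weights, mask)
print(outputs)

# After an update, the masked positions are still zero.
grads = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
updated = masked_update(weights, grads, mask, learning_rate=0.1)
print(updated)
```

The layer is still stored and computed as if it were fully connected; the zeros only make some connections inert, which is why this is a mimicry of sparse, hub-based wiring rather than the real thing.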

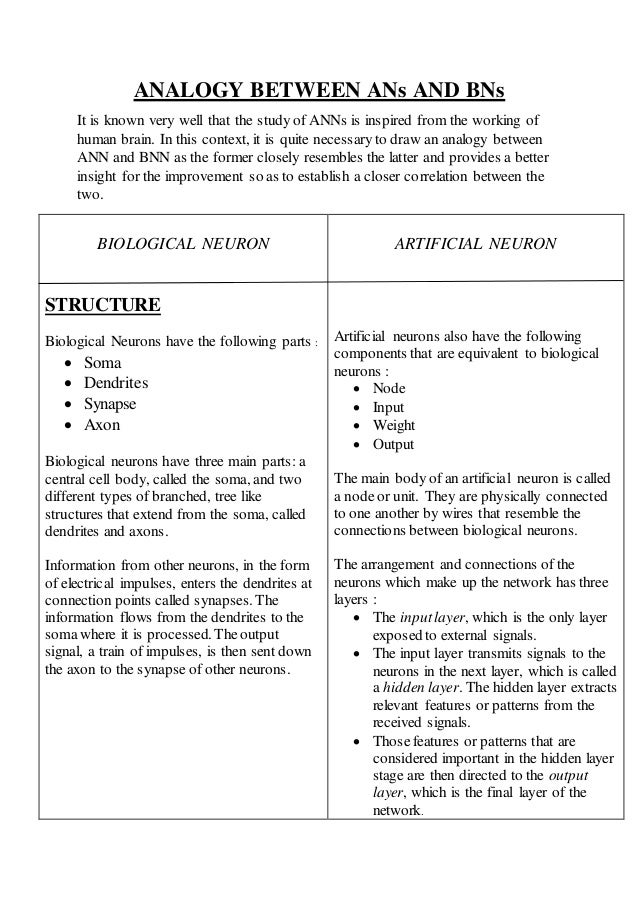

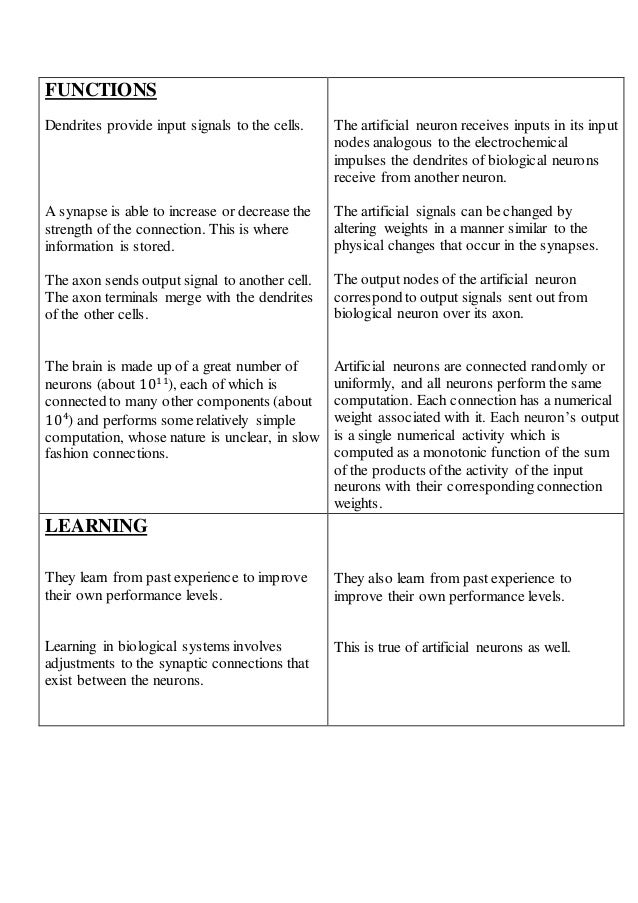

Despite these differences between artificial neural networks and biological neural networks, several analogies have been drawn between the two types of neurons involved. Here are some comparisons, courtesy of Saransh Choudhary, SoC Design Engineer at Intel Corporation.

So, there are several differences as well as similarities between artificial and biological neural networks, but the fact remains that artificial neural nets are far more primitive than their biological equivalents.

That being said, is it really necessary for an ANN to be “just like” a BNN for it to be useful to humans? Hardly. The specific functionality that an ANN offers is often far superior to anything a human can do. This has been proven over and over in the fields of computer vision, natural language processing and many more.

While it seems to be a given that artificial neural nets are nowhere near as complex as human or animal brains, they offer real-world value that is becoming more indispensable by the day. So many areas of human endeavor have been enhanced radically by ANNs and DNNs that there’s no going back.

So, going back to our original question: can deep learning be given a boost with more brain-like structures? It’s possible, although it might be out of reach until methodologies like SET become more popular. However, the real question is whether or not that’s actually necessary. Often, the human-made equivalents of what we find in the animal and plant kingdoms are far more useful to us than faithful copies would be.

In support of this assumption:

“Birds have inspired flight and horses have inspired locomotives and cars, yet none of today’s transportation vehicles resemble metal skeletons of living-breathing-self replicating animals. Still, our limited machines are even more powerful in their own domains (thus, more useful to us humans), than their animal “ancestors” could ever be.” – Nagyfi Richárd in TowardsDataScience

While it’s definitely plausible that more brain-like structures could give deep learning a boost, the training challenges currently make them prohibitive. Meanwhile, developments in deep learning are certainly proving their worth in the real world. Is that a trade-off we should consider being happy with for now?