The DARPA Grand Challenge, subsequently reborn as the Urban Challenge, the Robotics Challenge and the FANG Challenge, has always been about pushing the boundaries of technology. Whether it’s about autonomous vehicles racing through the desert or robots working in dangerous environments, the core objective has been to achieve the impossible – or, at the very least, the highly improbable.

This year, the DARPA Grand Challenge brings machine learning to the forefront, with the emphasis on doing it in real time. The Real-time Machine Learning program, a partnership between DARPA and the National Science Foundation, is about “the creation of a processor that can proactively interpret and learn from data in real-time, solve unfamiliar problems using what it has learned, and operate with the energy efficiency of the human brain.” Funding is set at $10 million for the winner.

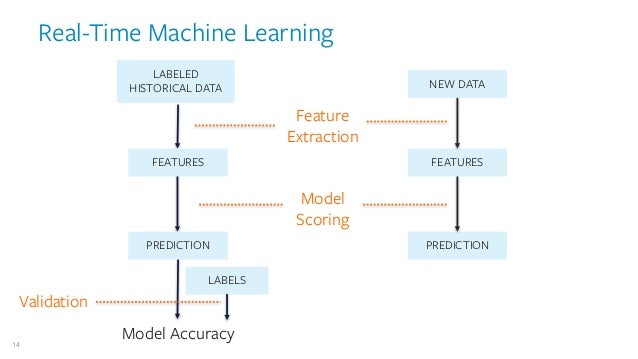

What is Real-Time Machine Learning?

Real-time machine learning is not a new field, but so far it has been used mostly in speech recognition and computer vision. Other applications include credit card approval at checkout, which usually has to happen within a few seconds, recommendation engines with real-time inputs, and routing in logistics and transportation.

True real-time learning involves removing the bottlenecks that are traditionally present in today’s processing, such as ETL (extract-transform-load) batch processes, which can leave the data out of date before the analysis even takes place.
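To make the contrast with batch processing concrete, here is a minimal sketch of online learning, in which a model scores each event as it arrives and then updates itself immediately, with no batch step in between. It is an illustration only, not how any system mentioned in this article works: the `OnlineLogisticRegression` class, the `event_stream` generator and the synthetic data are all assumptions made up for the example.

```python
import math
import random


class OnlineLogisticRegression:
    """Hypothetical model: logistic regression updated one event at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        z = max(-30.0, min(30.0, z))  # clamp so math.exp cannot overflow
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, features, label):
        # One stochastic gradient step on the log loss for a single event.
        error = self.predict_proba(features) - label
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error


def event_stream(n_events, seed=0):
    """Synthetic stand-in for a live feed (assumed data, not real events)."""
    rng = random.Random(seed)
    for _ in range(n_events):
        features = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        label = 1 if features[0] + features[1] > 0 else 0
        yield features, label


model = OnlineLogisticRegression(n_features=2)
for features, label in event_stream(2000):
    score = model.predict_proba(features)  # answer the request right away
    model.learn_one(features, label)       # then learn from the outcome
```

The point of the design is that the model never waits for an extract-transform-load cycle: every event is both a prediction request and a training example, so the weights always reflect the most recent data.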

One solution to this problem of processing bottlenecks is the use of in-memory computing integrated with multilayer perceptron deep learning features. This can typically be scaled up to operational data sets in the petabytes. One such system is the GridGain Continuous Learning Framework, which is intended for companies that don’t have massive budgets for machine learning projects. It is built on Apache Ignite, which includes an in-memory database, an in-memory data grid and streaming analytics. It accelerates NoSQL and relational databases, and provides high availability and horizontal scalability while supporting distributed SQL.

This particular DARPA Grand Challenge is looking for real-time machine learning systems of this kind, but ones that are built from the ground up and offer the same capabilities in a low-power, lightweight form.

The challenge is split into two 18-month phases. Phase I aims to eliminate the often prohibitive cost of application-specific integrated circuits (ASICs) by creating hardware compilers that generate ML-specific chips automatically from high-level source code. Phase II calls for two demonstration applications supported by the hardware optimization developed in Phase I.