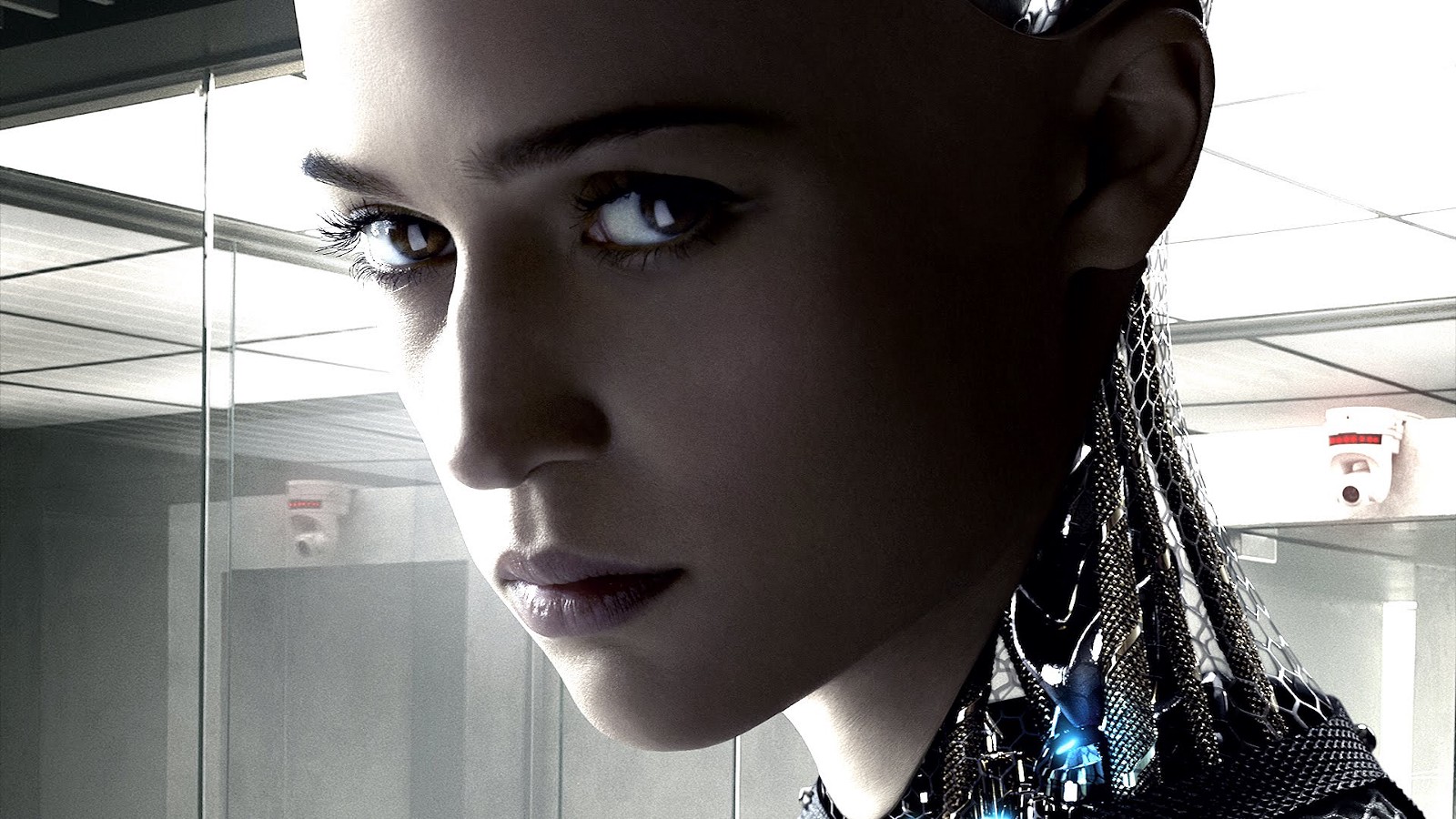

Every AI researcher’s dream is to be the first to develop an artificial intelligence entity capable of doing everything an average human can. The problem is that the “average” for a human is actually super-human, and largely unachievable at this point in time for a machine, no matter how “intelligent” it is. Rather than get lost in the semantics of intelligence and what it means, let us go even further back and look at the question from the point of view of consciousness as defined by modern-day psychology.

What is Consciousness?

Esoteric interpretations aside, consciousness is defined by most psychologists as an individual or collective human experience. The memory of your first taste of ice cream, the awareness of owning your body, the knowledge of love versus the experience of falling in love, the bond between the victims of

Most experts agree that consciousness undeniably exists at the individual, group and universal levels. However, some still maintain that consciousness is an illusion ill-suited to scientific scrutiny. Consider this comment from Christof Koch in Scientific American:

“Many modern analytic philosophers of mind, most prominently perhaps Daniel Dennett of Tufts University, find the existence of consciousness such an intolerable affront to what they believe should be a meaningless universe of matter and the void that they declare it to be an illusion. That is, they either deny that qualia exist or argue that they can never be meaningfully studied by science.”

While we’re not here to argue the case for consciousness, we do want to spell out what we mean by consciousness from an AI angle. As applied to artificial intelligence entities, consciousness is the ability to form representations of themselves as well as of external entities such as other living and non-living beings. We need not extend that definition to include innately human traits such as emotion, but an awareness of such traits is certainly required.

So, what will happen to the human race once AI achieves this type of consciousness? The moment itself is referred to as the singularity.

There are a number of ways to look at this phenomenon and its potential impact on human life and civilization as we know it. One way is to see how it might impact various areas of existence.

Economic and Commercial Impact

A super-intelligent AI system will have a high impact in this area because of an irksome but useful thing called automation. The more processes are automated, the less work there will be for humans. Until now, we have believed that automation will lead to human jobs that require greater skills, and that this problem can be addressed by altering our education system and focusing on the skills that humans will need in a highly automated environment.

If the singularity happens, that’s a moot point because, by definition, a super-intelligent entity will be able to surpass any human at any endeavor. This could have a disastrous effect on the core macroeconomic infrastructures that make up our global commercial superstructure, causing tectonic shifts in a system that is already delicately balanced.

Security Impact

When super-intelligent systems take over security and weapons systems across cyberspace, geospace and space itself (CGS), humans will have little control over these realms. The supercomputers will make escalation decisions unsupervised, and they might just decide one fine day that the world is better off without a human population.

Will they then start exterminating the parasitic race that plagues the earth so that computers and robots can better manage the future of the planet? It’s clear that we humans now realize we are incapable of taking care of this ball of dirt hurtling through space. How long do you think it will take a super-intelligent entity to arrive at the same conclusion and see wiping out the human race as a viable solution to the earth’s resource problems?

The Final Frontier for Humans: The Challenge of Handling AI

We don’t even need to look beyond the impact in these two areas, because together they will lead to a total loss of control by humans. This is the vision of bestsellers and Hollywood producers, but it is no longer mere entertainment. These are real-world outcomes with a high degree of probability if the AI singularity does, in fact, happen.

This is why it is vital that we put a muzzle on AI research while it’s still possible. The tools available to the average researcher are already astounding, and it’s only a matter of time before someone develops a self-learning AI entity that is as capable as a human brain, if not more so.

Rather than trying to study all the ways in which AI can impact our future negatively, we need to look at mitigation measures to ensure that we never arrive at such a future. Inviolable global ethical standards should be our prime area of focus, and any AI system found to transgress them must be swiftly nipped in the bud.

No doubt that’s a massive challenge, but do we really have a choice about whether or not to take it up? The only alternative is an unimaginable one where humans are eventually ‘phased out of the equation.’

A collective and immediate push by the world’s academia, governments

We can find middle ground. It’s not impossible, no matter how gargantuan the task might seem at this point. The sad truth is that we’re nowhere near creating a global body that can effectively address the matter at hand. A fragmented effort, even one executed by large entities, will always fall short of what’s actually required. We don’t need NGOs to warn us of the horrendous nature of uncontrolled AI proliferation. What we need is decisive planning, action and enforcement by the world’s most powerful nations.

And therein lies the real challenge. Can we get all the world’s superpowers under one roof to work on a global plan to tackle the AI menace? What will it take to make that happen? A major AI fallout that wipes out the human population of an entire country? We certainly hope not.