Social media networks are coming under increasing pressure to monitor and manage their content. First it was fake news, then terrorism content on Facebook, then suicidal posts and video content – again, on Facebook – and now it’s Twitter trying to curb abusive tweets on its microblogging platform.

Earlier this week we reported that Facebook had begun to explore the use of artificial intelligence to identify suicidal behavior on Facebook Live, its live video streaming service, as well as improve flagging options on Facebook Messenger.

Facebook Using Artificial Intelligence to Identify and Flag Suicidal Behavior

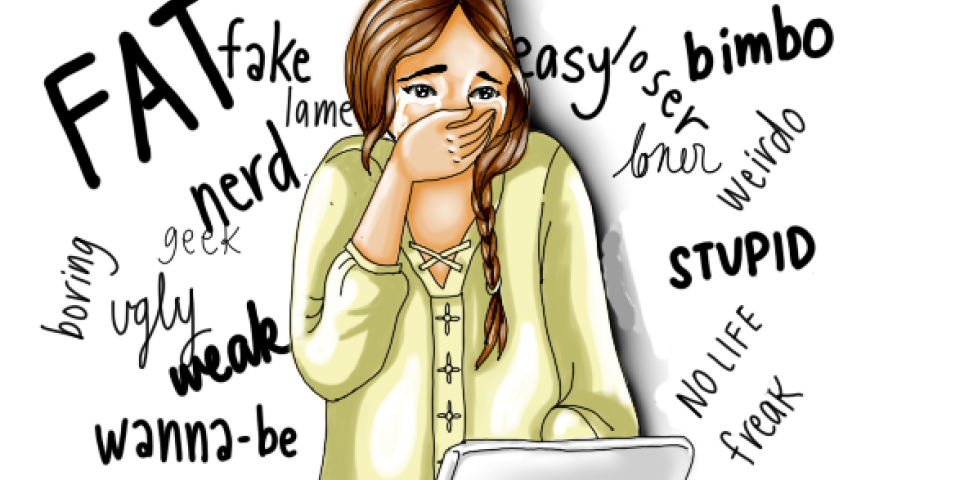

Now it’s Twitter’s turn, and the issue is abusive tweets aimed at its users. User harassment is a growing problem, especially among teenagers, but it also affects millions of adults all over the world. Cyberbullying, though addressed early on, is still rampant on social media networks like Facebook, Instagram and Twitter.

As a proactive step towards fighting abusive content, Twitter has released yet another update that makes it easier to identify abusive tweets and tweeters. The update comes nearly three months after Twitter CEO Jack Dorsey called for feedback on how to improve the social platform.

Twitter has already made three significant changes to its platform that it hopes will help reduce the negative impact of abusive content. In November 2016, it added tools for users to gain more control over bullying, harassment and abusive content. Last month, Twitter introduced additional options for flagging offensive content.

The new changes will automatically collapse tweets that Twitter identifies as potentially abusive. In addition, steps are being taken to prevent banned or suspended users from creating fresh accounts. We’ll also be seeing a “safe search” feature that hides certain flagged results, as well as tweets from suspended accounts, when you do a search.

Neither automation nor manual intervention nor even crowd-sourced flagging is going to eliminate abusive content. The word ‘abusive’ itself is open to interpretation, so no purely objective rule can decide which content to block, mute or remove.

However, with more measures in place, Twitter can effectively begin to fight the bulk of abusive content that targets specific groups of people. The new features will be rolled out to users in the coming days and weeks.

Nobody can wipe the slate clean and make sure that only positive content that is not offensive to anyone is posted on Twitter. With more than 300 million users, that’s impossible. But we applaud Twitter’s efforts towards making the social microblogging service a safer place to visit.

A Positive Outlook for Social Media

All the major social media platforms now have control systems in place for users to flag undesirable or offensive content.

In addition, automation and artificial intelligence are becoming the new weapons of choice against harmful posts and tweets.

Together, these measures could keep a large portion of such content at bay.

There’s a long way to go, but at least these companies are taking steps in the right direction.
