Canadian computer scientist Yoshua Bengio is considered by many to be one of the three Godfathers of Deep Learning, along with Geoff Hinton and Yann LeCun, Facebook’s VP & Chief AI Scientist. So the world sits up and takes notice when he expresses concern that technologies he helped advance are being used by the Chinese government to surveil and control its citizens.

What’s Happening in China?

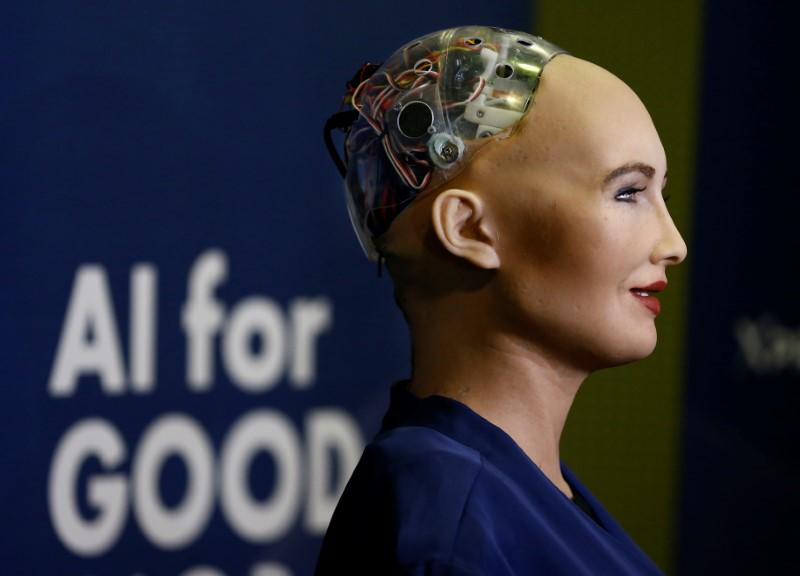

Face recognition is not new, but recent advances have made it more accurate than ever, and Bengio’s work has contributed significantly to that progress. China is now reportedly combining face recognition with CCTV surveillance to catch citizens breaking the law, for offenses ranging from political dissent to much simpler “crimes” like jaywalking.

Bengio calls this a “1984 Big Brother scenario,” a reference to the Orwellian concept of omnipresent government surveillance. He says, “The use of your face to track you should be highly regulated.”

But the godfathers of deep learning clearly do not agree on how AI research should be directed. While LeCun has chosen to operate out of the cozy offices of the world’s largest social media company, Bengio is a humble academician: a university professor and head of the Montreal Institute for Learning Algorithms, or Mila. Bengio argues that some large companies may need to change the way they approach AI development.

Bengio’s concerns about China’s use of AI are echoed by the likes of billionaire investor and philanthropist George Soros, who recently decried the risk to minority rights and civil liberties in China in his speech to the World Economic Forum on Jan 24, 2019.

The Abuse and Misuse of Artificial Intelligence

The abuse of AI is not necessarily a new phenomenon for us, but it might as well be, considering we have little in the way of guidelines and frameworks to prevent it.

Cybercriminals use machine learning and deep learning models to mount smarter and more automated attacks than ever; the most powerful countries on the planet are steadily developing autonomous military weapons; and AI research with little regard for ethics continues unabated in college laboratories, at AI startups and in private research facilities owned by large corporations. Yet, as a global community, we have still to develop a framework that gives AI research a positive direction.

Of course, that’s not to say that initiatives aren’t forthcoming. There are any number of formal organizations and loosely associated groups that are making an effort to control the viral proliferation of AI development.

But among the chief hurdles to these efforts is the fact that large corporations often develop commercial AI technologies that serve their top- or bottom-line needs in the short term but, in the long run, open a Pandora’s box of ugly ways their tech can be misused.

Examples of misuse could be as simple as SAT and GRE essays being graded by machine learning algorithms riddled with bias, or as complex as using existing deep learning models to create genuine-looking spam emails carrying malicious payloads. The abuse and misuse of AI is already prevalent, if not yet rampant. What’s going to happen when it eventually, and inevitably, reaches that point?

That is what Bengio, Soros, Elon Musk, Bill Gates and Stephen Hawking, among others, have worried about: the indiscriminate development of AI applications without regard for their implications, and the improper use of those applications.

What we hold in our hands is a double-edged sword. A many-edged sword, to be more accurate. It cuts several ways, just as a surgeon’s scalpel can be used to save a life, enhance a life or take a life.

Of course, we must recognize that there’s really no guarantee that an AI bible, a common rulebook for AI, will be the panacea for all these issues, but it would draw the line between good AI and bad AI in terms of how it’s used rather than what it is. It’s the use that matters. The face recognition that China is misusing to incriminate its citizens is, on an average smartphone, a convenience and security feature.

The wise or unwise use of AI is not something that can be documented as a flow chart and applied as a mandate to government and private research. If the wrong application of such technology is the problem, then the source of the problem is not the technology itself but its implementation: how it is used.

Put simply, you can’t ban AI just because it can be misused. That would be like banning guns in America because of (more than) a few mass killings involving firearms. Interesting parallel, isn’t it?

The Right to Bear AI is an unwritten amendment, so we’re not getting rid of it in a hurry. But the direction it is taking in China is causing ripples around the world because of one thing: it implies that any government that condones monitoring its citizens is going to explore AI as a logical option, whether it’s for civil surveillance, maintaining law and order, preserving national security or engaging in international espionage.

What is happening in China could happen under any extremist regime, left or right. AI technology will keep getting better, and since who has access to the technology matters more than the technology itself, the more accessible it becomes, the more dangerous it potentially becomes.

Very few modern societies around the world fear guns the way Americans fear them, and one reason may be that legally purchasing a firearm in America is merely a question of due process. That same due process has put deadly weaponry into the hands of people who weren’t capable of using it responsibly. The number of mass shootings in America over the past several decades is

AI looks like it’s headed in that direction as well. The key difference between AI and guns is that there are very few good things guns can do, whereas with AI the potential for good is unlimited. That may be its saving grace. Not that it needs one, to be honest, because AI is here to stay. We just have to find a way of restricting how it is used.

The starting point could be something like a Geneva Convention for AI: a global forum where the world’s most powerful countries pledge to follow strict, apolitical international policies with a single objective, protecting humanity from the negative impact of abused or misused artificial intelligence technologies.

The Paris climate agreement may have fallen far short of expectations, but an AI agreement on a similar scale must be iron-clad, with severe penalties for policy violations by member nations and strict sanctions against erring non-members.

In addition, punitive action must be brought to bear against individual and corporate violators, as well as misdirected academicians looking to solve creative problems with potentially destructive deep learning models.

It would further benefit the cause if independent, third-party regulators or overseers were brought into the picture to offer a more balanced approach to policing the AI landscape.

And it looks like such a collaboration is long overdue. The revelation of China’s use of AI should be a wake-up call to global leadership. Oh, wait, Trump’s still in the White House. Darn, there goes any attempt at a global consensus. Nevertheless, the rest of the developed world must come together and champion this indispensable and increasingly urgent agenda item.