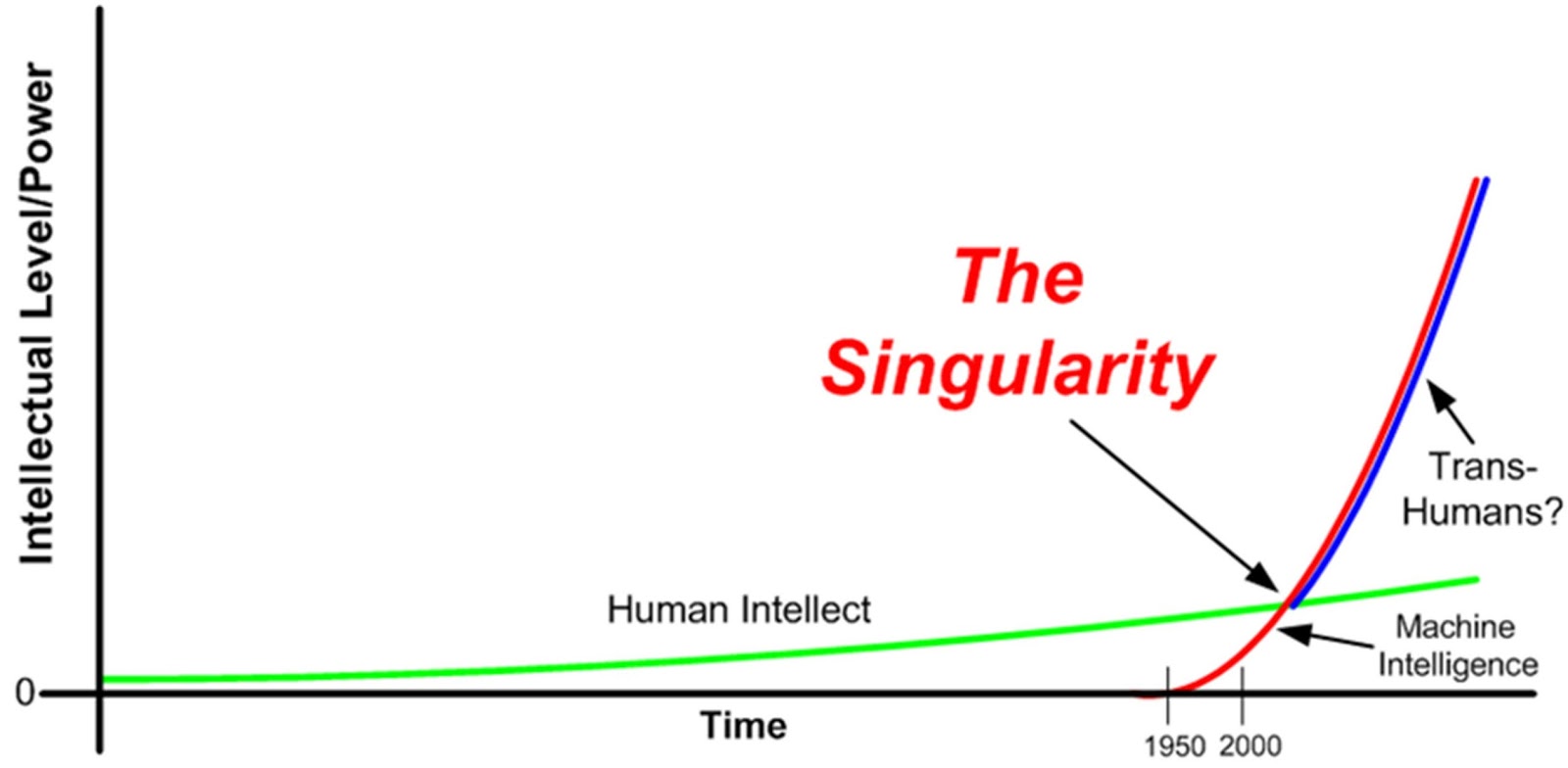

In the field of artificial intelligence (AI), the singularity – or technological singularity – refers to a point in time when artificial superintelligence (ASI) will trigger an explosion of machine-driven technological development that will change the world as we know it.

How Will the Singularity Be Achieved?

Although there are several definitions of the singularity, most agree that it will begin with an artificial general intelligence (AGI) entity that can improve on itself without human intervention. Its evolution into a more powerful agent, an ASI, will cause an “intelligence explosion” that notable personalities like Tesla CEO Elon Musk and physicist Stephen Hawking have surmised could eventually lead to the extinction of the human race.

As far back as 1965, British mathematician and cryptologist Irving John Good, who worked alongside Alan Turing during World War II, propounded the concept of an intelligence explosion. Here’s how he defined it:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind.”

The scenario he paints is very bleak. Essentially, the first supercomputer capable of “recursive self-improvement” could be the last invention of mankind. The machine would then either create machines with greater capabilities than itself or rewrite its own programming to become ever smarter over time.

Such a computer or entity would not only be smarter than the average bear, but smarter than the smartest human being who ever lived.
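To make the idea of recursive self-improvement a little more concrete, here is a toy sketch in Python. It is only an illustration under a made-up assumption: each machine designs a successor that is better than itself by a fixed fraction. The function name `simulate_self_improvement`, the `gain` parameter and the "human level" baseline are all hypothetical and do not come from Good's paper or from any real AI system.

```python
def simulate_self_improvement(start=1.0, gain=0.5, generations=10):
    """Return the capability of each machine generation.

    Toy assumption (not from the article): every machine designs a
    successor whose capability is (1 + gain) times its own.
    """
    capabilities = [start]
    for _ in range(generations):
        # Each new machine is built by the most recent one.
        capabilities.append(capabilities[-1] * (1 + gain))
    return capabilities


if __name__ == "__main__":
    human_level = 1.0  # hypothetical baseline: 1.0 stands for the smartest human
    for generation, capability in enumerate(simulate_self_improvement()):
        print(f"generation {generation}: {capability / human_level:.1f}x human level")
```

Even with a modest fixed gain, the numbers grow geometrically, which is the intuition behind the phrase "intelligence explosion." Whether real systems would behave anything like this is exactly what the disagreement is about.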

Predictably, there has been much disagreement about how the singularity might be triggered. Some maintain that it will be a pure-machine entity, while others say humans themselves might find a way to amplify their own intelligence.

One hypothesis suggests a process called Whole Brain Emulation (WBE), where humans will “upload their brains” into a computer that will simulate a virtual world based on the brain’s model.

If you think that’s far out, consider that researchers at Purdue University have already found a way to get AI to read the human mind using functional magnetic resonance imaging, or fMRI. The computer interpreted the fMRI images and was able to accurately decode the information, or “see” what the subjects were seeing.

The approach by the Purdue researchers involves using a form of deep learning algorithm known as convolutional neural networks to study how the brain processes images, even moving images from a sample of nearly 1,000 videos.

In short, it was able to read the minds of at least three humans involved in the experiment.

Remember the famous Facebook Smart Bot experiment? Two AI bots were made to chat with each other after being shown several instances of humans negotiating over chat. The result was that the bots started communicating in a strange new language that only they could understand. The experiment was abandoned, but by then the bots had concluded their negotiations. Nobody knows what the outcome was.

Such developments show that we seem to be on the fringes of discovering the root of superintelligence, which will eventually lead to the singularity. That may not happen for several decades yet, but there are several similar instances of machines doing things that humans are unable to understand.

How Will the Singularity Change the World?

The end effect of the singularity is that human civilization will be changed forever, but there are many different scenarios that could play out if it happens.

Hungarian-American mathematician John von Neumann, who first used the term ‘the singularity’ in the technological context, said that “human affairs, as we know them, could not continue” in a post-singularity world.

The late Stephen Hawking, in a Reddit AMA on artificial intelligence, said that when AI entities start designing better AI entities than humans can, it “ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails.” This is the intelligence explosion that I. J. Good talked about.

Visionary British mathematician Alan Turing, in a paper dated 1951, wrote this:

“There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control.”

One theory propounded by roboticist Hans Moravec of Carnegie Mellon University suggests that robots will become a new artificial species of beings, and that this could happen as early as the 2030s or 2040s. He suggests that AI entities will be successors to human beings.

Others take it several steps further and say that machines that no longer find us useful to their own existence will drive us to extinction, systematically eradicating the human race if they consider us a threat to their future.

Elon Musk, in an interview with CNBC a few years ago, said this:

“There are huge existential threats, these are threats to the very survival of life on Earth, from machine intelligence.”

The Deliberate and Malicious Use of Artificial Intelligence

A more immediate threat than the singularity, which by all estimates is anywhere between 30 and 1000 years away (not a typo), is the deliberate use of AI with malicious intent.

Autonomous weaponry immediately comes to mind, but there are other ways in which AI can be used for political and other gains.

Consider this AI video experiment showing a fake Obama that looks like a genuine BBC News report.

Malicious use of bots to create fake social media stories, misuse or weaponizing of unmanned drones, highly targeted spam or phishing emails, automated hacking and other activities are already possible – and occurring on a near-regular basis.

Bad actors are already employing artificial intelligence tools for phishing, spearphishing and whaling. According to Lee Kim, Director of Privacy & Security at HIMSS:

“AI-fueled techniques are lower cost, higher accuracy, with more convincing content, better targeting, customization and automated deployment of phishing emails.”

These are clear and present dangers that have nothing to do with the singularity and everything to do with human weaknesses that can be exploited through the use of present-day artificial intelligence.

Some, like AI researcher Andrew Ng, believe that worrying about the singularity is like “worrying about overpopulation on Mars” when even the simplest AI algorithms are able to wreak havoc on human society today, not half a century from now.

Although the singularity is certainly a point of concern from a big picture perspective, as it should be, it is the immediate dangers from unregulated AI research and the wide availability of AI tools that we should be thinking about.

In an effort to speed up the evolution of AI, companies like Google, IBM and Amazon make AI tools easily available to anyone. Do they have adequate controls to ensure that malicious programs aren’t being developed on their respective AI platforms?

Most people are aware of Isaac Asimov’s Three Laws of Robotics that were originally written more than 75 years ago:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.
- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

But are you aware that there is a fourth law? Actually a Zeroth law, also propounded by Asimov:

- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Are these laws valid or even relevant after more than three-quarters of a century? Some say no. According to Ulrike Barthelmess and Ulrich Furbach at the University of Koblenz in Germany, such laws are not required because our basic fear of robots arises not from the machines themselves, but from other humans misusing them with malicious intent.

While that does make sense, the very definition of singularity precludes any involvement by human beings once the event itself occurs. In a post-singularity world, there’s no question of humans employing machines for malicious acts because it is machines themselves that will eventually be controlling themselves.

In conclusion (is there really a conclusion?), while it is certain that the singularity will forever change the world, it appears that there’s nothing we can do about it. Global organizations are being set up to try and control the proliferation of AI research by issuing guidelines not unlike Asimov’s laws. Even the United Nations has set up a panel to control the proliferation of “killer robots.”

But is this going to be effective in any way? With all the open source AI tools we already have at our disposal, have we inadvertently started the snowball rolling to an inevitable though unpredictable – and even unfathomable – end?

Sadly, this might be the stark reality that we seem to be deliberately ignoring because AI promises so many benefits to mankind.