Artificial neural networks, or ANNs, are frameworks within which machine learning algorithms learn to perform tasks without being given task-specific rules. They are modeled loosely on the natural neural networks found in animal and human brains, but ANNs aren’t algorithms by themselves. They play host to ML algorithms that learn from training datasets rather than from hand-written rules about that data.

As an example, an artificial neural network may learn to identify images of dogs without being given the attributes of a dog. It is trained on images labeled “dog” or “no dog”, and subsequently learns to identify dogs in new images on its own, all without being told that dogs have a particular anatomy or physical characteristics.

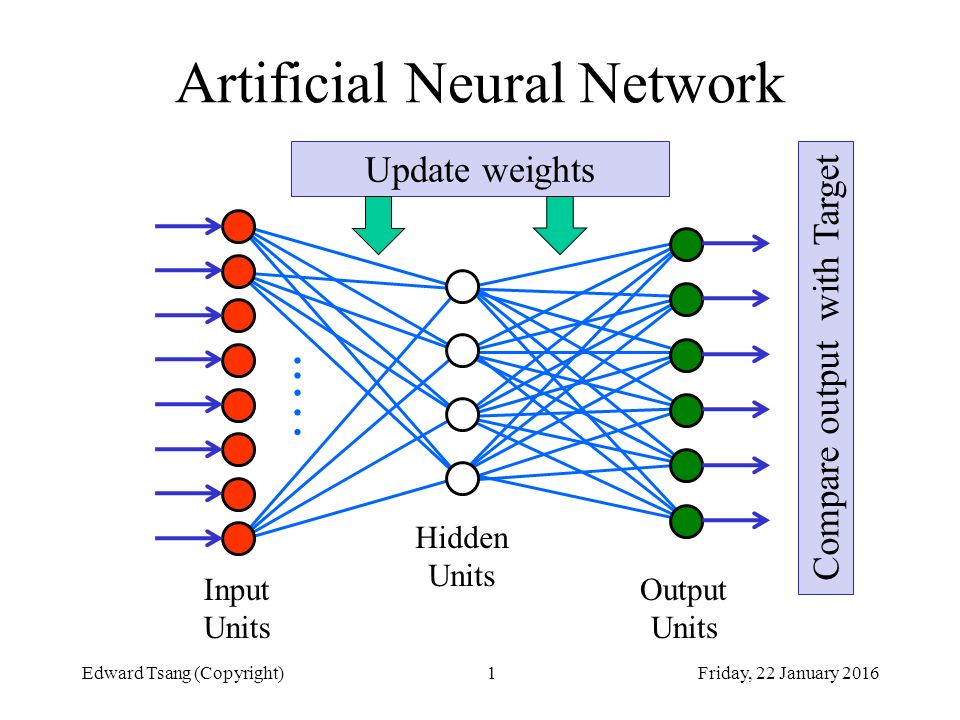

The network itself is composed of artificial neurons, which are interconnected nodes. Information passes from one node to another along connections called “edges”, and each edge has a “weight” that controls the strength of the signal it carries. In some implementations of ANNs, a threshold dictates whether a signal is passed on to the next artificial neuron.
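To make the idea of weights and thresholds concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function name `neuron_output` and the example numbers are hypothetical, chosen only for illustration; real implementations usually replace the hard threshold with a smooth activation function.

```python
def neuron_output(inputs, weights, threshold):
    """Return 1.0 if the weighted sum of the incoming signals reaches
    the threshold (the neuron "fires"), otherwise 0.0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Each edge scales the signal it carries by its weight.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1.0 if weighted_sum >= threshold else 0.0


# Three incoming signals and the (illustrative) weights on their edges.
signals = [1.0, 0.5, 0.0]
edge_weights = [0.8, -0.4, 0.3]

# The weighted sum is about 0.6, so a threshold of 0.5 lets the signal through.
fired = neuron_output(signals, edge_weights, threshold=0.5)
print(fired)
```

Raising the threshold above the weighted sum, for instance to 0.7, would keep the same neuron silent for the same inputs.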

Although originally intended to approach problem-solving in a way similar to a biological brain, ANNs have become more task-specific. Some of the areas of implementation include medical diagnosis, computer vision, filtering social networks, machine translation and speech recognition.

Most neural networks today are designed as feedforward networks and are typically trained with backpropagation, which adjusts the values of the weights connecting the units of the network. In this manner, the gap between the actual output and the desired output is gradually reduced until the system performs its task reliably, such as identifying whether or not a picture has a dog in it.
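The training loop described above can be sketched in a few dozen lines of plain Python. This is an illustrative toy, not how any particular library implements it: the function names, the two-unit hidden layer, the learning rate and the logical-OR dataset (standing in for “dog” / “no dog” labels) are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Feed one input forward through a single hidden layer."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, hidden_units=2, epochs=2000, learning_rate=0.5, seed=0):
    """Train with per-example gradient descent; return weights and loss history."""
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                for _ in range(hidden_units)]
    b_hidden = [0.0] * hidden_units
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_units)]
    b_out = 0.0
    losses = [mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out))]

    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Error signal at the output (squared error through a sigmoid,
            # up to a constant factor absorbed by the learning rate).
            delta_out = (output - y) * output * (1.0 - output)
            # Backpropagation: push the error back through the output weights.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(hidden_units)]
            # Nudge every weight against its gradient.
            for j in range(hidden_units):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                b_hidden[j] -= learning_rate * delta_hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
            b_out -= learning_rate * delta_out
        losses.append(mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out)))

    return (w_hidden, b_hidden, w_out, b_out), losses


# Toy labeled dataset: logical OR of two inputs.
or_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

params, losses = train(or_data)
print("loss before training:", losses[0])
print("loss after training:", losses[-1])
```

The loss history is returned so that the shrinking gap between actual and desired output can be inspected directly; a real image classifier would follow the same pattern with far more inputs, layers and training examples.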

The possible use cases for neural networks are vast. A well-trained system might be used to detect quality anomalies in production, to auto-pilot an aircraft, to decide loan eligibility, to detect potential financial fraud and so on. The range of applications is broad even though ANNs don’t really behave like the human brain. This task-specific evolution of neural networks has allowed them to be used in numerous industries for a variety of applications.

One of the frontrunners in developing artificial neural networks is Google, now part of the Alphabet umbrella of companies. Google’s achievements in this space are well-known and often highly publicized. The company also offers TensorFlow, an open-source machine learning library that can be used to build neural nets.

More recently, Google open-sourced GPipe, a library for more efficient training of DNNs, or deep neural networks. According to Google AI software engineer Yanping Huang:

“Deep neural networks (DNNs) have advanced many machine learning tasks, including speech recognition, visual recognition, and language processing. Ever-larger DNN models lead to better task performance and past progress in visual recognition tasks has also shown a strong correlation between the model size and classification accuracy. GPipe … we demonstrate the use of pipeline parallelism to scale up DNN training to overcome this limitation.”

The secret to GPipe’s success is in the way it achieves better memory allocation for machine learning models. As an example, GPipe allows for 318 million parameters per accelerator core, against 82 million parameters without it, roughly a fourfold increase. With GPipe, training efficiency, accuracy and speed all improve substantially, which is the ideal scenario for training large-scale deep neural nets.